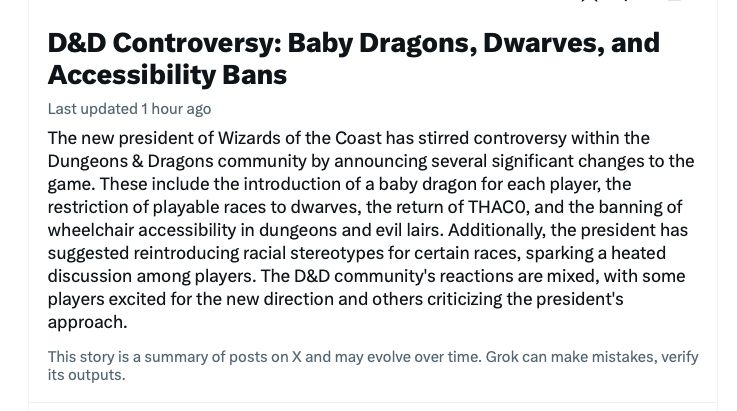

I guess I don’t have to worry about my job going away quite yet. This is what Twitter’s AI thingy thinks is currently happening in the industry I work in.


Twitter thinks there's a new WotC president who will give you a baby dragon.

Morrus is the owner of EN World and EN Publishing, creator of the ENnies, creator of the What's OLD is NEW (WOIN), Simply6, and Awfully Cheerful Engine game systems, publisher of Level Up: Advanced 5th Edition, and co-host of the weekly Morrus' Unofficial Tabletop RPG Talk podcast. He has been a game publisher and RPG community administrator for over 20 years, and has been reporting on TTRPG and D&D news for over two decades. He is also on the socials.

EzekielRaiden

Follower of the Way

This would require judgement and discernment that "AIs" do not possess. At best, they could be used to check scraped data from various human-picked trusted sources and collect them together for analysis.

To be fair, it is not too far off from the journalism we see splattered across the web. Clickbait titles that don’t match the actual story, no verification of facts, and little attempt made to determine the true story are probably more common than true journalism.

What would be nice is if AI could build up a list of trusted sources, or research and find those people for an industry, and then conduct follow-up fact checking with these people. That is time-consuming work human “journalists” rarely do anymore. The article could then quote and list their sources. If a source wished to remain anonymous, the AI would need to verify those facts against a minimum consensus of other sources or label them as potentially untrustworthy.

Real, old-school journalists used to do this all the time. ENWorld does this. Many do not.

Nylanfs

Hero

Well to be honest there's lots of "humans" that also fail this test.

That is the problem in a nutshell.

Currently, AI can't tell the difference between "trending" versus true.

Yaarel

🇮🇱 🇺🇦 He-Mage

Hence, passing the Turing Test is soon.

Well to be honest there's lots of "humans" that also fail this test.

Xethreau

Josh Gentry - Author, Wanderer

AI have passed Turing Tests several times already. It has been an outdated benchmark for several years now.

Hence, passing the Turing Test is soon.

Li Shenron

Legend

Read the fine print...

"This story is a summary of posts on X". My guess is that this AI is picking up what most people are writing on that social network. The funniest bull***t may get retweeted a lot more than the real boring news.

Yaarel

🇮🇱 🇺🇦 He-Mage

I can still easily distinguish whether I am talking with a human or with a computer.

AI have passed Turing Tests several times already. It has been an outdated benchmark for several years now.

I am still waiting for Turing, to be genuinely uncertain when conversing at length.

mach1.9pants

Hero

The return of anti-disability and racial stereotypes is possibly a pointer to the terrible stuff that a lot of X users can freely post, I wonder.

Xethreau

Josh Gentry - Author, Wanderer

I regret to inform you that you'll be waiting for Alan Turing for a long time. He ate a poison apple in 1954 and died.

I can still easily distinguish whether I am talking with a human or with a computer.

I am still waiting for Turing, to be genuinely uncertain when conversing at length.

EzekielRaiden

Follower of the Way

As others have noted, more than one AI has been able to pass a Turing test before--as in, any one given trial thereof. Whether or not it is even possible for any AI, even a theoretical true AGI that is itself conscious and sapient, to pass every Turing test ever applied to it...well, that just seems like an impossibly high bar.

Hence, passing the Turing Test is soon.

Not that the Turing test was ever intended to actually act as an evaluation of whether an AI is actually intelligent. The whole point of Turing's proposal was that actually testing for consciousness might be a fool's errand, something you can't meaningfully do, and thus we should look for useful proxies that can be objectively tested (for some definition of "objectively").

Whether or not it is "outdated" is frankly irrelevant. It has had a serious, largely unanswered criticism of its incompleteness since at least 1980, with Searle's Chinese Room argument. That something can mimic the syntax of a language is an inadequate proxy for whether that thing has a mind in the way that humans have minds. It may in fact be that the entity has a mind; but showing this purely through syntactic manipulation does not demonstrate that it has one.

AI have passed Turing Tests several times already. It has been an outdated benchmark for several years now.

Then you simply haven't been following the literature. Several programs have fooled people at length, and that was even before ChatGPT came on the scene. ChatGPT as it stands (which, AIUI, uses GPT-3) has successfully passed more than one round of Turing testing--because the objective isn't to make it so people cannot ever tell they aren't chatting with an AI, but rather to make it so that the third-party observer cannot determine which of two chat participants is a person and which is an AI any more reliably than chance (p=0.5) when both are attempting to speak as naturally as possible.

I can still easily distinguish whether I am talking with a human or with a computer.

I am still waiting for Turing, to be genuinely uncertain when conversing at length.

It's never been about having someone who knows they're human chatting with another entity they don't know is human--or, at least, the proper Turing test has never been about that.

I mean, this is a problem for human beings as well. Circa three hundred years ago, there were people confidently asserting that combustion is a phlogiston-dependent phenomenon. And, without pushing too hard against board rules, I'm sure you're aware of many contemporary examples of large numbers of humans confidently and sincerely affirming falsehoods.

"AIs" encode only what they are exposed to in their neural network. If they are exposed to a falsehood, they will regurgitate falsehoods. These are distinct from what are called "hallucinations," where the AI is confidently wrong about something more or less by accident (AIUI, because it prioritizes good grammar above any other concern). Apparently, there are differences in code processing between hallucinations and (for lack of a better term) "sincere" responses, which means it is possible to train a module to detect these differences. However, even with such efforts, (a) "hallucinations" don't go away, they just become harder to identify, and (b) unknowing but "sincere" falsehoods cannot even in principle be detected this way.

So we still have the enormous problem of correcting AIs that are confidently incorrect.

The reason that people can tell the difference between truth and falsehood - when they can - is not because of their mastery of semantics as well as syntax, but because of their mastery of evidence. It's quite a while (close to 30 years) since I worked on these issues; but I think a couple of points can be made.

First, what Quine called occasion sentences must be pretty important. These can be identified by syntax, I think, at least to a significant extent. But AI doesn't have epistemic access to the occasions of their utterance.

Second, when it comes to what Quine called eternal sentences, human beings closely correlate their credence in these with their credence in the speaker. My understanding of the way these AI models "learn" is that speaker identity does not figure into it, and that they are not grouping sentences in speaker-relative bundles. So, e.g., they might note that sentences about the moon are often correlated with sentences about NASA, but (as I understand it) they don't weight sentences about the moon in terms of their production by NASA compared to Wallace (who travels with Gromit to the moon because their larder is empty of cheese).

I'm definitely not an expert in AI, and as I said I'm out of date in epistemological literature. But on the face of it, these problems of warrant seem more significant than the issue of semantics vs syntax.

Right. Which is not a problem in linguistics (syntax vs semantics). It's a problem in epistemology (evidence/warrant).

This would require judgement and discernment that "AIs" do not possess.
