After it was revealed this week that one of the artists for Bigby Presents: Glory of the Giants used artificial intelligence as part of their process when creating some of the book's images, Wizards of the Coast has made a short statement via the D&D Beyond Twitter (X?) account.

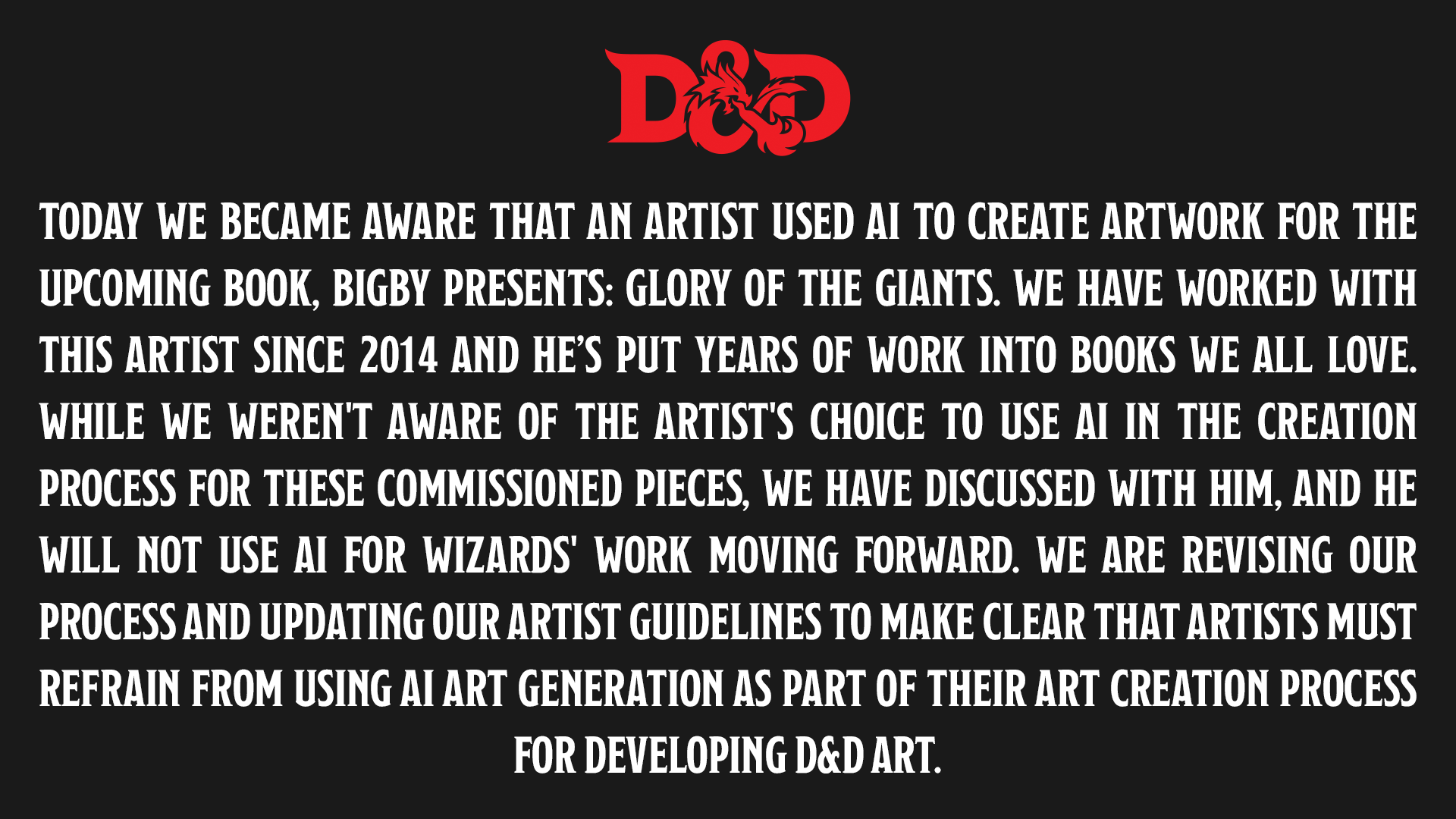

The statement is in image format, so I've transcribed it below.

Today we became aware that an artist used AI to create artwork for the upcoming book, Bigby Presents: Glory of the Giants. We have worked with this artist since 2014 and he's put years of work into books we all love. While we weren't aware of the artist's choice to use AI in the creation process for these commissioned pieces, we have discussed with him, and he will not use AI for Wizards' work moving forward. We are revising our process and updating our artist guidelines to make clear that artists must refrain from using AI art generation as part of their art creation process for developing D&D art.

-Wizards of the Coast

Ilya Shkipin, the artist in question, talked about AI's part in his process during the week, but has since deleted those posts.

There is recent controversy on whether these illustrations I made were ai generated. AI was used in the process to generate certain details or polish and editing. To shine some light on the process I'm attaching earlier versions of the illustrations before ai had been applied to enhance details. As you can see a lot of painted elements were enhanced with ai rather than generated from ground up.

-Ilya Shkipin