They could know how they work, but they chose not to document and regression test after each new data point was included. It was their choice to do it this way so that they could get it done quicker. It would take a tremendous amount of time and they are more interested in beating their competitors to the market than the advancement of human knowledge.

Again, this is not true in all cases. In some cases, yes, companies don't divulge the training data, the initial parameters, and/or the model architecture for proprietary reasons. But in other cases, we simply don't know how a model works, only that it does, and we know that only through experimentation. It's not just that data scientists won't tell you how their architecture works; it's that they are unable to tell you (even if they wanted to). This is all the more true once you start getting into the big leagues, with LLMs having billions of parameters trained on petabytes of data.

Also, traditional regression testing does not work for most machine learning predictions. Why? Because most QA done today relies on deterministic answers: given input A, I always expect output B. Much of machine learning is really statistics on steroids, so results vary from one training run to the next and a prediction is a probability, not a guarantee.

At best, you can tell whether one model architecture predicts better than another (or better than the same model with tweaked initial weights, training epochs, learning rate, etc.). How best to QA test machine learning is an active area of research.
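To make the contrast concrete, here is a minimal sketch in Python. Everything in it is a toy assumption on my part: `order_total_cents` stands in for ordinary deterministic code, and `evaluate_model` is a hypothetical placeholder for "train a model and return its held-out accuracy", with the two accuracy levels picked arbitrarily. It is not how any particular team tests their models, just an illustration of exact-match checks versus threshold and comparison checks.

```python
import random
from statistics import mean

def order_total_cents(unit_price_cents: int, quantity: int) -> int:
    """Ordinary deterministic code: same input, same output, every time."""
    return unit_price_cents * quantity

def evaluate_model(seed: int, p_correct: float, n_samples: int = 2000) -> float:
    """Hypothetical stand-in for 'train a model, return held-out accuracy'.

    Each prediction is right with probability p_correct, so the score
    wobbles from seed to seed the way real training runs do.
    """
    rng = random.Random(seed)
    correct = sum(rng.random() < p_correct for _ in range(n_samples))
    return correct / n_samples

# Traditional regression test: input A must give exactly output B.
assert order_total_cents(250, 4) == 1000

# ML-style check: run several seeds and compare averages, not exact values.
seeds = range(10)
baseline_scores = [evaluate_model(s, p_correct=0.85) for s in seeds]   # assumed baseline
candidate_scores = [evaluate_model(s, p_correct=0.92) for s in seeds]  # assumed candidate

# An exact-match assertion on any single score would be brittle,
# so we assert a minimum quality bar and a relative improvement instead.
assert mean(candidate_scores) >= 0.88
assert mean(candidate_scores) > mean(baseline_scores)

print(f"baseline:  {mean(baseline_scores):.3f}")
print(f"candidate: {mean(candidate_scores):.3f}")
```

Note that the second pair of assertions tells you only that the candidate is better on average, which is exactly the "at best" situation described above. It says nothing about why.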

I do agree, however, that companies are rushing headlong into more training and not taking the time to truly understand how their models work. That's why that petition was sent asking for a moratorium, to give the data science community time to develop better inference and explainability techniques. How many times do we have to play with matches when it comes to technology and not consider the "unforeseen consequences"?

We do not have the tools to build a functioning brain a neuron at a time, but we do have that ability with these algorithms. We could be discovering how the evolution of consciousness functions by watching a brain be built one neuron at a time, but they would rather try to make fat stacks of cash instead.

Sorry, but that's just not going to happen...at least not until we get quantum computers, and then probably. It's also questionable why we would need or want to "recreate" a human brain (it would be an imperfect model of our own brain, and may not be necessary for true AGI).

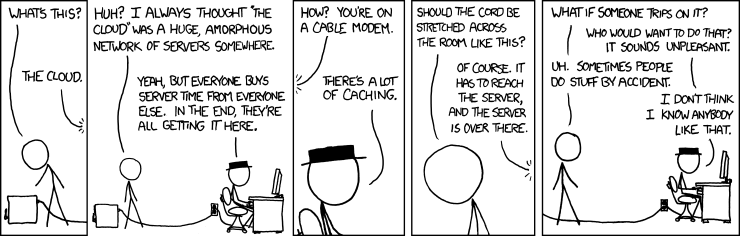

People don't know how much compute power it takes to train these models. Everyone thinks that cloud compute is infinite, but it isn't [link to a pdf]. People also usually don't talk about gathering and cleaning the training data, but that can also be prohibitively expensive (I have seen Spark cluster jobs that cost millions per week). In my experience at work, sometimes you simply can't get on-demand instances, and certain machine types (especially GPU instances) are in such high demand that spot instances are out of the question. So scaling up to human-brain levels of neural connections is not feasible in practice with our current tech.

Quantum computers, on the other hand, can exploit superposition of quantum bits: a single 64-qubit register can hold a superposition of 2^64 basis states at once (that's 2 raised to the 64th power...that's huge). This is often described as massive parallelism, though a measurement returns only one result, so the speedup shows up only for problems that have a suitable quantum algorithm, not for every instruction stream. Granted, I'm also not factoring in anything IO bound (e.g., access to memory), but still. There's also renewed interest in analog computers due to certain advantages they have specifically for machine learning.
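To put 2^64 in perspective, here is a rough back-of-the-envelope sketch of what it would take just to store the state of an n-qubit register on a classical machine. The assumptions are mine: a brute-force state-vector representation with one 16-byte complex number per basis state. Real simulators use cleverer tricks, so treat this as an upper-bound illustration only.

```python
BYTES_PER_AMPLITUDE = 16  # assumption: one complex number stored as two 64-bit floats

def statevector_bytes(n_qubits: int) -> int:
    """Memory needed to hold all 2**n amplitudes of an n-qubit state."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE

GIB = 2 ** 30  # bytes per gibibyte
EIB = 2 ** 60  # bytes per exbibyte

for n in (16, 32, 64):
    size = statevector_bytes(n)
    print(f"{n:2d} qubits: {2 ** n:>26,} amplitudes, "
          f"{size / GIB:,.3f} GiB ({size / EIB:,.6f} EiB)")
```

Under those assumptions, 32 qubits already needs 64 GiB and 64 qubits needs 256 EiB, which is why brute-force classical emulation runs out of road so quickly.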

Also, don't be fooled by the term "neural network" and assume they really are like our own neurons. Some researchers, like anesthesiologist Stuart Hameroff and the esteemed mathematician Sir Roger Penrose, think our neurons have a mechanism that operates, at least on some level, via quantum mechanics. As the eminent physicist Richard Feynman argued, our classical computers can simulate (albeit very slowly) everything a quantum computer can do, with one exception: nonlocality. If our brains work at some kind of quantum mechanical level, our classical computers won't be able to fully emulate our minds. This does not, however, mean they can't achieve their own form of intelligence. It just would not necessarily be like ours, even if we could have as many artificial perceptrons as our brains have neurons.

This has been my huge bone of contention with other so-called Computer Science experts saying that LLMs are not AGI and don't "think", "understand" or have "true" intelligence. They are all comparing our computers to how our brains work, but 1) we don't know how our own intelligence works (we can't even properly define intelligence) and 2) AGI doesn't have to think like we do. For #2, jet airplanes don't have to flap their wings to fly like birds do, so why does AGI have to have the same kind of intelligence as our own mind/brain?

Lastly, the view of consciousness as deriving from the brain (i.e., an epiphenomenon) is only one school of thought. The truth is, we don't know how consciousness is formed, though there are ideas aplenty. As I mentioned earlier, perhaps consciousness requires a quantum mechanical aspect...or maybe not. Would we even know whether an AGI (whether built from LLMs or something else) is conscious? We can't detect it in ourselves, so how could we do it with machines?
