Ryujin

Legend

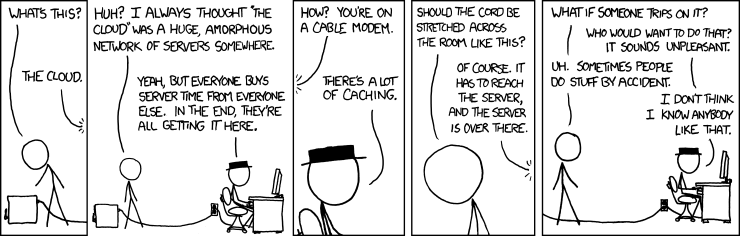

Shades of "The IT Crowd" and the internet box.

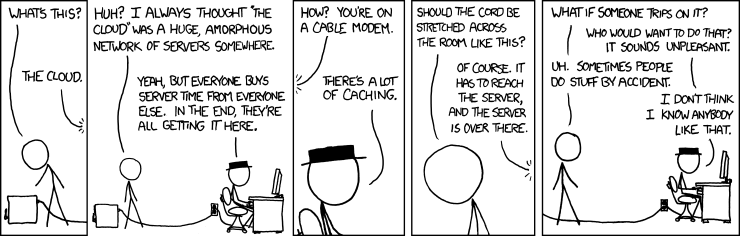

There's planned downtime every night when we turn on the Roomba and it runs over the cord

Again, this is not true in all cases. In some cases, yes, for proprietary reasons, companies don't divulge the data used, the initial parameters, and/or the model architecture. But in other cases, we simply don't know how it works, only that it does, through experimentation. It's not just that data scientists won't tell you how their architecture works, it's that they are unable to tell you (even if they wanted to). This is all the more true once you start getting into the big leagues, with LLMs having billions of parameters trained on petabytes of data.

Also, traditional regression testing does not work for most machine learning predictions. Why? Because most QA done today relies on deterministic answers. For example, given input A, I always expect output B. Much of machine learning is really statistics on steroids, so the outputs are probabilistic rather than exact.

At best, you can tell whether one model architecture predicts better than another (or than the same model with tweaked starting weights, training epochs, learning rates, etc.). How best to QA-test machine learning is an active area of research.
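To make the difference concrete, here is a minimal sketch of what a statistical check can look like in place of an exact-match assertion. Everything in it is made up for illustration: `noisy_classifier` is a hypothetical stand-in for a trained model that is right about 90% of the time, and the 0.85 threshold is an arbitrary choice. The point is only that the test asserts on aggregate accuracy over several seeds, not on any single output.

```python
import random


def noisy_classifier(x, rng, accuracy=0.9):
    """Hypothetical stand-in for a model: predicts (x > 0) correctly
    with probability `accuracy`, and flips the answer otherwise."""
    truth = x > 0
    return truth if rng.random() < accuracy else not truth


def measured_accuracy(seed, n=2000):
    """Fraction of correct predictions on n random inputs for one seed."""
    rng = random.Random(seed)
    correct = 0
    for _ in range(n):
        x = rng.uniform(-1, 1)
        if noisy_classifier(x, rng) == (x > 0):
            correct += 1
    return correct / n


def passes_statistical_check(seeds=(0, 1, 2, 3, 4), threshold=0.85):
    """Pass if the mean accuracy across seeds clears the threshold.
    Individual predictions are never compared to a fixed expected value."""
    scores = [measured_accuracy(s) for s in seeds]
    return sum(scores) / len(scores) >= threshold, scores


ok, scores = passes_statistical_check()
print(ok, scores)
```

A classic "input A must give output B" assertion would be flaky here, because any single prediction can legitimately be wrong. The per-seed scores will also typically differ a little from each other, which is why the check uses a threshold and not an exact number.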

I do agree, however, that companies are rushing headlong into more training and not taking the time to truly understand how their models are working. That's why that petition was sent asking for a moratorium, to allow time for better inference and explainability techniques to be developed in the data science community. How many times do we have to play with matches when it comes to technology and not consider "unforeseen consequences"?

Sorry, but that's just not going to happen...at least not until we get quantum computers; then, probably. It's also questionable why we would need or want to "recreate" a human brain (it would be an imperfect model of our own brain, and may not be necessary for true AGI).

People don't know how much compute power it takes to train these models. Everyone thinks that Cloud Compute is infinite, but it isn't [link to a pdf]. People also usually don't talk about the gathering and cleaning of data for the training, but that can also be prohibitively expensive (I have seen Spark cluster jobs that cost millions per week). In my experience at work, sometimes you simply can't get on-demand instances, and certain machine types (especially GPU instances) are in such high demand that spot instances are off the table. So scaling up to human brain levels of neural connections is simply not feasible with our current tech.

Quantum computers, on the other hand, exploit superposition of quantum bits: a register of n qubits can hold a superposition of 2^n states at once. A single 64-qubit register therefore spans 2^64 basis states (that's 2 raised to the 64th power...that's huge), though that is not the same as running 2^64 64-bit computers in parallel, since a measurement returns only one result and only certain algorithms get a speedup. Granted, I'm not factoring in anything IO bound (e.g., access to memory), but still. There's also renewed interest in analog computers due to certain advantages they have specifically for machine learning.
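To put a number on how fast 2^n grows, here is a rough sketch of how much memory a classical machine would need just to store the full state of an n-qubit register. The 16 bytes per amplitude is my assumption (one complex number as two 64-bit floats). It says nothing about how fast a real quantum computer runs; it only shows the size of the state space.

```python
def statevector_bytes(n_qubits, bytes_per_amplitude=16):
    """Bytes needed to hold all 2**n complex amplitudes of an n-qubit state.
    Assumes 16 bytes per amplitude (two 64-bit floats)."""
    return (2 ** n_qubits) * bytes_per_amplitude


for n in (10, 30, 64):
    # Columns: qubits, number of basis states, bytes to store the state
    print(n, 2 ** n, statevector_bytes(n))
```

At 30 qubits that is already 16 GiB, and at 64 qubits it is 2^68 bytes, far beyond any machine's memory.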

Also, don't be fooled by the term "neural network" and assume they really are like our own neurons. Some researchers, like anesthesiologist Stuart Hameroff and the esteemed mathematician Sir Roger Penrose, think our neurons have a mechanism to operate at least on some level via quantum mechanics. As the eminent physicist Richard Feynman argued, our classical computers can simulate (albeit very slowly) everything a quantum computer can except one thing: nonlocality. If our brains work at some kind of quantum mechanical level, our classical computers won't be able to fully emulate our minds. This does not, however, mean they can't achieve their own form of intelligence. It just would not necessarily be like ours, even if we could have the same number of artificial perceptrons as our human brains have neurons.

This has been my huge bone of contention with other so-called Computer Science experts saying that LLMs are not AGI and don't "think", "understand" or have "true" intelligence. They are all comparing our computers to how our brains work, but 1) we don't know how our own intelligence works (we can't even properly define intelligence) and 2) AGI doesn't have to think like we do. For #2, jet airplanes don't have to flap their wings to fly like birds do, so why does AGI have to have the same kind of intelligence as our own mind/brain?

Lastly, the view of consciousness as deriving from the brain (i.e., an epiphenomenon) is only one school of thought. The truth is, we don't know how consciousness is formed, though there are ideas aplenty. As I mentioned earlier, perhaps consciousness requires a quantum mechanical aspect...or maybe not. Would we even know that AGI (whether through LLMs or something else) is conscious? We can't detect it in ourselves, so how could we do it with machines?

Depends on the model architecture. Reinforcement learning may update weights based on past experience. There are also Bayesian weights that have conditional probabilities, and some other architectures that can have stochastic weights/biases.

Shouldn't its responses remain the same until additional training data is added? I would assume we don't want these models incorporating everything they experience into their memory, or else they will become garbage really fast.

I believe the problem here is that adding layers isn't linear. One of the courses I took showed this very interestingly. The instructor is a big advocate of experimentation, so some of the exercises were to tweak various things, like weights, training epochs, learning rates or even the number of hidden layers. He's also a big advocate of visualizing results (made easy with matplotlib or plotly in Jupyter notebooks). What was interesting was that in some cases you would see the cost function plateau, and just by adding a single layer, you would see a dramatic improvement.

After adding in each new small set of training data, we should really be reverifying functionality. I understand this would take massive teams and years to accomplish, but what we gain in knowledge and understanding of evolving systems and emergent behavior would be tremendous.

Ah I gotcha now. I would agree with that.

Sorry if my analogy was confusing. I meant that we would learn about how intelligence emerges from increasing complexity. I was going off your explanation of it being similar to the neurons in a human brain. To try to understand how these models work when it's already so complex is like trying to understand our brain and consciousness now. It is much easier to understand it as we build it.

Yes, interesting in theory, but I'm not sure there's a way they can detect it. I've only read some articles on some of Chalmers's work, but one of the more interesting takes on consciousness/mind/intelligence is still from Hofstadter's excellent 40+ year old book, Gödel, Escher, Bach: An Eternal Golden Braid. The idea that self-recursion can cause consciousness is pretty interesting.

While interesting, there doesn't seem to be any evidence that there are quantum phenomena in the microtubules of our brains. I think consciousness arises from sufficient complexity.

100% agree.

The human race does not truly want to know how consciousness is formed. We are so attached to our religious beliefs and so hamstrung by our fear of death that we will try to come up with all sorts of reasons why it has to be something special. If we realized that the only difference between us and other life forms was that we created language to allow us to understand abstract concepts, we would look back at ourselves as monsters for all we have done.

I'm not against AI, but if we can't understand why it does what it does, it is basically the blind leading the blind. I don't want us all to fall into a pit.

I'll have to take a look at Le Ton beau de Marot then. GEB was very fascinating, and I read it in my senior year of Computer Science. I should go back and read it again. I do recall Hofstadter being a little harsh on Zen (though I wish he had called it Chan, because Chan is older than Zen).

To this day, GEB remains one of the best, and most thought-provoking, books I have ever read.

I also have a soft spot for Le Ton beau de Marot (same author, 1997), because it really made me think about the issues of frame of reference; such as the idea of how you can translate wordplay (like a pun) from one language to another, and even what it means to "translate" something. Which is, well, something that I've been thinking about given the use of AIs to translate, and what underlies it.

They literally regurgitate whole passages of text, while exhibiting problematic biases caused by what they’re fed to “learn” and how, and they get stuff wrong very often in ways that any kid would be able to avoid.

EDIT: I found the quote I was trying to reply to

That's, at best, a gross oversimplification and, at worst, flat-out wrong. Truth is, we don't exactly understand how LLMs work, and that makes them all the more frightening. These Large Language Models don't just rearrange words and regurgitate content. Not only are the computer scientists not even really sure how LLMs are capable of doing what they are doing, some even question whether LLMs "understand" how they are doing what they are doing.

So I am with you in the sense that we need to put a hold on AI, but for a totally different reason that I will explain later. I don't think generative AI with RNNs (Recurrent Neural Networks) or NLP (Natural Language Processing) through Transformer models like BERT, Llama or GPT are just plagiarizers. I do believe they "learn". Is it stealing for a human to study the works of the masters when learning how to paint? We humans learn by watching and studying others. Our styles are also shaped by those we have an affinity for. Are we all plagiarizers too?

If the argument is, "they shouldn't have taken the data without the creator's consent", that's a bit more hairy...but even then, it's not any different from what humans do. Can you stop me from studying Van Gogh or Rembrandt to learn how to paint? Or listening to Jimi Hendrix to learn how to play guitar? Or imitating the dance moves of Michael Jackson?

These LLMs and Generative AI are doing the same: learning. What makes them dangerous is that we don't know how they are doing what they are doing, what biases they picked up from the data they were trained on, and how realistic their output is, to the point that it can affect society (e.g., think deep fake news). Jobs have always been under threat from technology. This is just the first time in history that the creatives and knowledge workers, and not just the blue collar types, have been affected.

About 4 months ago, a letter and petition were put out calling for a moratorium on new LLM training and research. Last I remember, it had over 12k signatories, some of them luminaries in data science, philosophy and physics (one I recall sticking out was Max Tegmark). If you read it, the concern was that these LLMs are showing a lot of emergent behavior that can't really be explained. If any computer scientist tells you "LLMs aren't intelligent", they are full of it. We don't know how our intelligence works, so how can they make the preposterous claim that these LLMs haven't achieved some kind of early AGI (Artificial General Intelligence)?

A hot area of research in Machine Learning is called explainability. Data scientists are scratching their heads over how some of these models work. In many ways, data science is a return to good old fashioned empirical science: just run experiments, observe the results, then try to come up with a hypothesis to explain how what happened, happened. In most science today, you have a hypothesis, then you come up with an experiment to test it, record the results and compare them to your hypothesis. This is the other way around. You start with data, and try to learn what the "rules" are by testing out various statistical configurations (the models or layers in deep learning).

In classic programming: rules + data => answers

In machine learning: data + answers => rules
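A toy sketch of the two directions, using Celsius to Fahrenheit as a made-up example. The function names and the sample temperatures are mine, and the "answers" are generated from the known rule only so there is something to fit; in real life they would come from observations. The fit is plain least squares, nothing like a deep network, but the direction of the arrow is the same.

```python
def c_to_f_rule(c):
    """Classic programming: a human writes the rule, data goes in, answers come out."""
    return c * 9 / 5 + 32


def fit_line(xs, ys):
    """Machine learning in miniature: recover slope and intercept of
    y = slope * x + intercept from data and answers (ordinary least squares)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


data = [-40, -10, 0, 8, 15, 22, 38, 100]        # inputs (Celsius)
answers = [c_to_f_rule(c) for c in data]        # illustrative labels only

slope, intercept = fit_line(data, answers)      # the learned "rule"
print(round(slope, 3), round(intercept, 3))
```

The learned slope and intercept come out at about 1.8 and 32, i.e. the rule nobody typed into `fit_line`.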

What machine learning is doing is figuring out "the rules" for how something works. To reduce it to plagiarism or regurgitation misses what it is doing. It's figuring out patterns and relationships, and yes, what the next most likely word is (though it is much, much more complicated than simple Markov chains). Some of the tasks that GPT-4 has handled are truly amazing to me, and they lit a fire under my ass: I need to learn how this stuff works or I am going to be out of a job in the next 10 years.
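For contrast, here is roughly what the "simple Markov chain" version of next-word prediction looks like: count which word follows which, then pick the most frequent follower. The tiny corpus and the function names are invented for illustration. An LLM conditions on far more context and learns its statistics very differently, so treat this only as the baseline being compared against.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, how often each other word follows it."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def most_likely_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never
    seen with a follower."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


corpus = "the cat sat on the mat and the cat ate the fish"
model = train_bigrams(corpus)
print(most_likely_next(model, "the"))   # prints "cat"
```

The model only ever looks one word back, which is exactly why it cannot do the kinds of things that make GPT-4 surprising.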

I'm curious what the prompts were. I haven't actually found the prompts used to make LLMs reproduce text verbatim. Is it different from asking for a summary or reference? If the text is copied verbatim without some kind of reference in the prompt itself, that would be a problem...but also not entirely surprising. We humans do something similar all the time. We make references to pop culture, quoting lines from a movie or book to make a point, explain something, or just be funny. Our memories aren't as good as a computer's, though, so usually they are short phrases.

They literally regurgitate whole passages of text, while exhibiting problematic biases caused by what they’re fed to “learn” and how, and they get stuff wrong very often in ways that any kid would be able to avoid.

Because they are not learning in the same sense that animals do. They’re just gaining more data and running algorithms using that data.