Three news stories came out this week about algorithmic generation, aka "AI art," in the tabletop gaming industry.

BackerKit announced that, effective October 4, no project on its platform may include writing or art assets created entirely by algorithmic generation, aka “AI”. From the blog post:

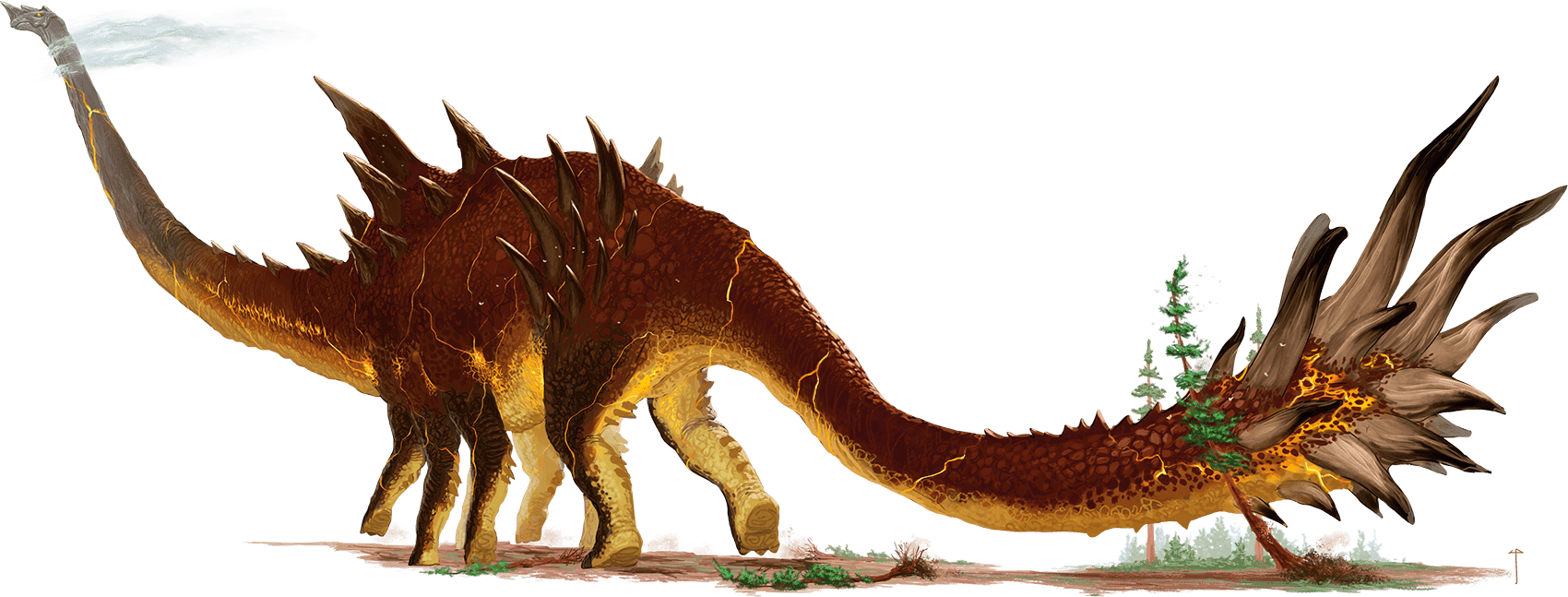

> At BackerKit, our team is passionate about people’s passions. For ten years, we’ve supported creators in their journey to launch projects and build thriving creative practices and businesses. We’ve developed deep relationships and respect for the people who breathe life into crowdfunding projects, and we are committed to defending their well-being on our platform.
>
> That’s why we are announcing a new policy that aims to address growing concerns regarding ownership of content, ethical sourcing of data, and compensation for the process of creating content. […]
>
> As part of this consideration, BackerKit has committed to a policy that restricts the use of AI-generated content in projects on our crowdfunding platform.
>
> This policy goes into effect on October 4, 2023.
>
> […] This policy emphasizes that projects on BackerKit cannot include content solely generated by AI tools. All content and assets must first be created by humans.
>
> This doesn’t impact content refined with AI-assisted tools like “generative content fill” or “object replacement” (image editing software functions that help blend or replace selected portions of an image), other standard image adjustment tools (saturation, color, resolution,) or AI language tools that refine human-created text with modifications to spelling, grammar, and syntax.
>
> Software assisted by AI, such as transcribers or video tracking technology are permitted under these guidelines. However, software with the purpose to generate content using AI would not be permitted.

The post includes image examples of what content is and is not allowed. Additionally, BackerKit will add an option to the back end that allows creators to “exclude all content uploaded by our creators for their projects from AI training”. This exclusion is on by default, meaning creators who want their work used to train generative algorithms must go in and specifically allow it.

This move comes alongside a pair of recent controversies in tabletop gaming. Last month, Wizards of the Coast came under fire when it was revealed that a freelance artist had used algorithmic generation for artwork in Bigby Presents: Glory of the Giants. Wizards of the Coast quickly updated its stance on algorithmic generation with a statement that the artwork would be removed from the D&D Beyond digital copies of the book and that new language banning the use of algorithmic generation would be added to its contracts.

This week, Gizmodo reporter Linda Codega reported that the artwork in the D&D Beyond version of Bigby Presents has now been replaced with new art. No announcement was made about the new artwork, and when Gizmodo contacted Wizards of the Coast for comment, it was directed to the statement made in August. The artist who used algorithmic generation, Ilya Shkipin, has been removed from the book's art credits, and the artwork has been replaced by works by Claudio Prozas, Quintin Gleim, Linda Lithen, Daneen Wilkerson, Daarken, and Suzanne Helmigh.

Meanwhile, Essen Spiel, the largest tabletop gaming convention in Europe, recently ran into the same controversy when it emerged that the convention's promotional material, including its official app, promotional posters, and event tickets, used algorithmically generated artwork.

Merz Verlag, the parent company of the convention, responded to a request for comment from Dicebreaker:

"We are aware of this topic and will evaluate it in detail after the show. Right now please understand that we cannot answer your questions at this moment, as we have a lot to do to get the show started today," said a representative for Merz Verlag.

"Regarding the questions about Meeps and timing, I can tell you quickly that the marketing campaign [containing AI artwork] has been created way before we had the idea to create a mascot. The idea of Meeps had nothing to do with the marketing campaign and vice versa."

Meeps, a board-game-playing kitten who is totally innocent of the controversy (because who could blame a cute kitty?), is the convention's new mascot. Announced this past July, Meeps was voted on by fans and designed by illustrator Michael Menzel.