D&D 5E (2024) Using AI for Your Home Game

- Thread starter NaturalZero

cbwjm

Legend

The problem with this argument is that it treats the question as either/or. If we have AI that can sort out our laundry and dishes (which is pretty easy anyway with washing machines, dryers, and dishwashers), that doesn't mean we can't also have AI that writes and makes art. She'd still be able to do her own art and writing, and others would be able to use AI for both chores and art.

“The massive amount of wasted energy might tip the balance.”

How much energy does it take to generate one image?

Compare whatever you get to the energy I expend in a week riding a bike. I've got a power meter accurate to within +/- 1%. I can put out 200 W through the pedals for an hour, and I'll do that for about 10 hours per week. That's 2 kWh of mechanical work; humans are 20-25% efficient, so that's 8-10 kWh of energy burned per week just from biking.

Each hour I'm on the bike has to be worth hundreds and hundreds of images generated by AI.
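For anyone who wants to check the biking arithmetic, here is a minimal sketch. The function name `metabolic_kwh` is made up for illustration, and it assumes that energy burned is simply mechanical work divided by efficiency, using the 200 W, 10 hours, and 20-25% figures from the post.

```python
def metabolic_kwh(power_w, hours, efficiency):
    """Energy burned (kWh) to deliver `power_w` watts at the pedals for `hours`.

    Assumption: burned energy = mechanical work / efficiency.
    """
    mechanical_kwh = power_w * hours / 1000.0  # W * h -> Wh -> kWh
    return mechanical_kwh / efficiency


# Figures from the post: 200 W for 10 hours a week, 20-25% efficiency.
low = metabolic_kwh(200, 10, 0.25)   # more efficient rider, less energy burned
high = metabolic_kwh(200, 10, 0.20)  # less efficient rider, more energy burned
print(f"{low:.0f}-{high:.0f} kWh per week")
```

The mechanical work alone is 2 kWh per week; the 8-10 kWh range comes entirely from the efficiency assumption.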

In another thread, I posted the article published on nature.com (in Scientific Reports) about the energy question.

www.nature.com

Here's the relevant comparison for image generation:

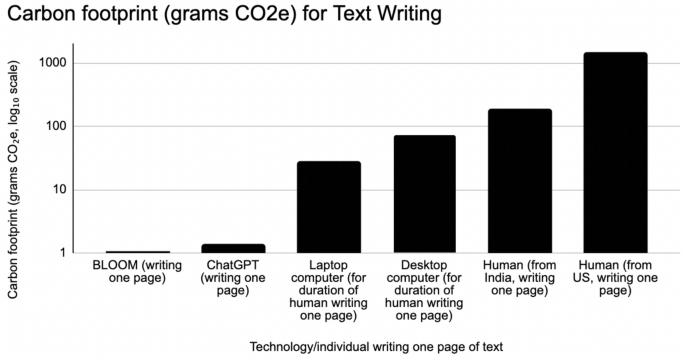

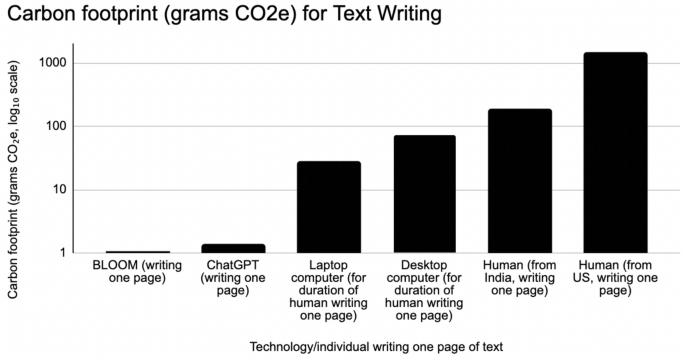

While the energy AI uses to create an image is indeed massive, the energy used to power the computer while a human creates the image with graphics software is tremendously higher.

The human's total CO2 emissions can't really be counted as savings, because we must assume the human would still be living, and probably producing CO2, while not creating an image (the human wasn't created for the purpose of generating images). The relevant part is the comparison between Midjourney generating a single image and a human producing one with computer graphics software.

If we were really concerned about the energy consumed to get an image, we should ban human image creators from using a laptop or, worse, a desktop, and have them actually pick up a brush and paint on a canvas. And even then, it's quite possible that the environmental cost of shipping the brush, paint, and canvas from China to the artist and then shipping the result to the customer (possibly with several rounds of back-and-forth for complex work) would exceed the total cost of AI image generation.

Thank god we agree not to worry about the energy spent on our hobbies!

Depends on the image, the model, and the computer you use. Image size matters: generating a 4k image will require more energy than a 1k image, obviously, and the cost tends to be proportional to image size. Model complexity increases the amount of compute needed to output an image, by a lot. For example, an Nvidia RTX 4090 graphics card can output 30 images per second using a 0.6-billion-parameter model, but the same card takes 20 seconds to generate one image with a 12-billion-parameter model. Then the energy depends on the efficiency of the processor. A 4090 has a peak consumption of 450 W, but more optimized, professional GPUs designed for servers can be much more efficient. How long you are willing to wait for the result also matters (you can accept a drop in performance in exchange for energy savings). For example, to run a local state-of-the-art model, you could use a 4090 for 20 seconds and get an image for 2.5 Wh, or you could get the same image for twice the energy by using an earlier-generation card, or for 1.9 Wh on an H100-equipped server (newer chips are more efficient, newer models are more compute-hungry).

You won't need to ride your bike for long!
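As a rough sanity check, energy per image is just power draw multiplied by generation time: 450 W for 20 seconds works out to 2.5 watt-hours. The sketch below uses hypothetical helper names and assumes the card runs at its full 450 W the whole time, then compares the result to the cyclist's 200 W at 20-25% efficiency mentioned earlier in the thread.

```python
def energy_wh(power_w, seconds):
    """Energy in watt-hours for a device drawing `power_w` watts for `seconds`."""
    return power_w * seconds / 3600.0


# Assumption: the 4090 draws its full 450 W for the whole 20-second generation.
per_image_wh = energy_wh(450, 20)

# Cyclist from earlier in the thread: 200 W at the pedals, 20-25% efficient,
# so roughly 800-1000 Wh burned per hour of riding.
burned_per_hour_low = 200 / 0.25
burned_per_hour_high = 200 / 0.20

images_low = burned_per_hour_low / per_image_wh
images_high = burned_per_hour_high / per_image_wh

print(f"{per_image_wh:.1f} Wh per image")
print(f"{images_low:.0f}-{images_high:.0f} images per hour of riding")
```

Under those assumptions, one hour on the bike corresponds to a few hundred locally generated images, which matches the "hundreds and hundreds" estimate above.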

The carbon emissions of writing and illustrating are lower for AI than for humans - Scientific Reports

As AI systems proliferate, their greenhouse gas emissions are an increasingly important concern for human societies. In this article, we present a comparative analysis of the carbon emissions associated with AI systems (ChatGPT, BLOOM, DALL-E2, Midjourney) and human individuals performing...

“I get the arguments against using AI to replace artists, but AI is great for making character art!”

Literally that’s replacing artists. Art has value, making it takes labor. Pay artists for their labor, learn to do it yourself, or get used to not having art. Otherwise, you are, in fact, taking work from artists.

If artists were willing to do my art for a reasonable price and meet my timeline I would have them do it. Last time I did this though it was like $50 for something that honestly wasn't that great and took like a day and a half to get back to me.

If you know an artist that is willing to work with me and develop character art for under $1 an image and do it on demand in minutes I will use them. Until I can find such an artist, I am going to keep using AI.

This graph is misleading as a visual because it uses a logarithmic scale.

Charlaquin

Goblin Queen (She/Her/Hers)

“If artists were willing to do my art for a reasonable price and meet my timeline I would have them do it.”

Right, you undervalue the labor the artist does, and therefore aren’t willing to pay the price they ask for it. Which means you don’t get the product. That’s how this works, just like how if you aren’t willing to pay the price a restaurant is asking for a burger, you either have to make your own or accept not getting to eat a burger.

“Last time I did this though it was like $50 for something that honestly wasn't that great and took like a day and a half to get back to me.”

$50 for a day’s work is like $6.25 an hour. For skilled labor! That artist is selling their labor at a significant discount in order to stay competitive in the market, and to you that comes across as an outrageous cost.

“If you know an artist that is willing to work with me and develop character art for under $1 an image and do it on demand in minutes I will use them. Until I can find such an artist, I am going to keep using AI.”

Right, so you are unwilling to compensate real humans fairly for the value of their work, and instead are going to use a machine that steals their labor and spits out soulless imitations.

Vaalingrade

Legend

“If you know an artist that is willing to work with me and develop character art for under $1 an image and do it on demand in minutes I will use them. Until I can find such an artist, I am going to keep using AI.”

So... you're not willing to pay people a fair price for their labor or have any sort of reasonable expectations on quality or timeline?

Somewhere a tech bro just got his mewing profile pic.
