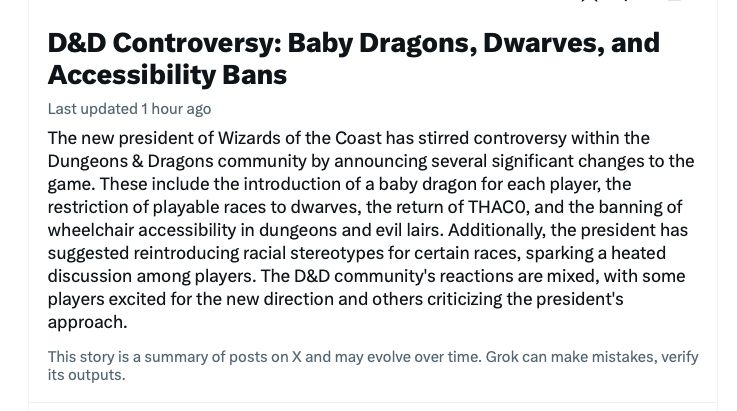

I guess I don’t have to worry about my job going away quite yet. This is what Twitter’s AI thingy thinks is currently happening in the industry I work in.

Twitter thinks there's a new WotC president who will give you a baby dragon.

Morrus is the owner of EN World and EN Publishing, creator of the ENnies, creator of the What's OLD is NEW (WOIN), Simply6, and Awfully Cheerful Engine game systems, publisher of Level Up: Advanced 5th Edition, and co-host of the weekly Morrus' Unofficial Tabletop RPG Talk podcast. He has been a game publisher and RPG community administrator for over 20 years, and has been reporting on TTRPG and D&D news for over two decades.

I think it suggests that human intelligence as expressed through the written word is very predictable. Indeed I think we all have felt the treadmill of people rehashing old ideas over and over again with the same phrases and word patterns. Now, I'm not saying that novel ideas never arise from human intelligence, but I'm open to that idea. Sometimes new ideas arise from combining old ideas, but sometimes they come from novel observations of the world.

I believe human creative thought consists mostly of the following:

a) Spotting connections between seemingly unrelated things.

b) Discerning previously undetected patterns.

c) Testing these connections and patterns to determine which are valid and useful, and which are spurious or dead ends.

d) Hooking up those insights to existing knowledge to produce something of functional and/or aesthetic value.

In addition, there is an ancillary skill:

e) Communicating your insights in a way that other people can understand.

That last item is not strictly required for creative thought, but sure is helpful if you want your work to have any real impact, or to make you any money.

Neural networks in general have made substantial progress on a) and b), and LLMs have done astonishing work on e). The big missing pieces right now are c) and d). These rely on a kind of broad holistic understanding that humans develop through decades of living in the world and doing stuff, which is extremely hard to reproduce using today's machine learning techniques.

It's worth noting also that c) and d) are the parts routinely overlooked by people who are enamored with the idea of being Creative! Thinkers! without having to actually, you know, do anything hard. I suspect there is a connection here, but I haven't yet decided if it's valid and useful, and I certainly haven't found any functional or aesthetic value in it except a vague sense of smug superiority.

Ancalagon

Dusty Dragon

on the AI is hype: the stock market is starting to catch on

https://www.ctvnews.ca/business/has-the-ai-bubble-burst-wall-street-wonders-if-artificial-intelligence-will-ever-make-money-1.6987513

Vaalingrade

Legend

On the even brighter side, there's going to be a couple huge companies who are going to be legally required to maintain hydroelectric, nuclear and wind power plants for decades due to this boondoggle.
