Ovinomancer

No flips for you!

Actually, the graph should look like this:Ok, since you continue to be hung up on the fact that my graph ends before the 3d6 curve gets to the top and bottom, here:

https://*****.com/mwqCNy7.jpg

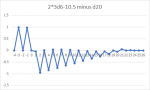

And for good measure, here's a graph of the differences in success probabilities at each adjusted DC (think of the x-axis of all of these graphs as the DC of the check minus the modifier).

https://*****.com/n4ZIKPk.jpg

So, across the range of adjusted DCs, the two methods yield success probabilities within 4.5% of each other; essentially, depending on the DC, switching from one to the other will give some characters the equivalent of somewhere between a -1 and +1.

And that's because you're graphing physical things - the 2*3d6 data DOES NOT EXIST except at certain points. I graphed the -11 vice your correction for simplicity and to avoid explaining how your correction causes this graph vs the -10 graph to exist half of the time resulting in a bit of a Schrodinger's graph. It's all bad assumptions.

You've still tossed half of the d20 data if you compare where the 2*3d6 curve actually exists. The 2*3d6 curve DOES NOT EXIST at half the data points you're comparing. It creates discrete data points spaced 2 apart. You can't use a model of a physical event non-physically and get coherent answers.Now hopefully we can agree that I haven't tossed any data, as I'm showing the full range of possibilities.

Yes, it DOES NOT EXIST, yet you're using it as part of your comparison.It exists as a target, not as a possible roll. If you have a 25 AC and are facing a monster with a +3 to hit, then the adjusted DC of their roll is 22. RAW they auto-hit you on a 20, but omitting that, they need a 22 to hit you, which means they can't.

This is a game that often hinges on a 5% difference and you're willing to cavalierly ignore the impact of 3% (and it's larger than that) just because you did mathemagic and can't acknowledge that's it's flawed. This is, of course, ignoring the parts where it's up to 95% different.Yes. I'm comparing 0% to roughly 3% and saying those are close. You can object for gameplay reasons (essentially, using this approximation makes some tasks that would be very easy instead automatic, and some that should be very difficult impossible; or vice versa, depending on which you're treating as the reference method and which the approximation method). But it's not a mathematical error.

I bolded the problem in your thinking I've been trying to point out. If you round down when halving, then rolling a 2 is the same as rolling a 3, rolling a 4 is the same as rolling a 5, etc, etc. You've tossed half of your unique rolls using this method because you're ended up at the same result for comparison to rolls you aren't tossing on a 3d6.Yes, if you roll a 1, and apply 10 + (roll-10)/2 (rounding down when halving), you get 5. And if you roll a 20, and apply 10 + (roll-10)/2, you get 15. This isn't some purely theoretical exercise. You could, in principle, do that math with your rolls at the table. That's not what @NotAYakk was actually suggesting, but adjusting the die rolls like that is mathematically equivalent to doubling your bonus and doubling the DCs' distance from 10.

And, yes, @NotAYakk had some small concessions that made their changes to modifiers make those fractions occasionally count (they didn't double attribute bonuses outright, and random die could still produce odd results), but quite a number of modifiers fit the straight doubling model that results in losing half the numbers on the d20 due to rounding. And the graphs certainly lose the data.

I haven't ignored anything. I've been entirely up front all along (as was the OP) about what happens with extreme DCs. The approximation is still good at those extremes as measured by differences in probability. You might not consider approximating 3% with 0% or vice versa to be a good approximation, and that's fine. That's a matter of gaming priorities, not math.

You're losing 10% of the possible results, probability-wise. Not 3%. Focusing on a single delta as if it stands in for the total fidelity loss is not kosher.

Except half the unique die rolls, again.You keep saying this but I haven't tossed out anything.

They are not. I provided an example earlier, a very slightly modified version of the OP example, that resulted in an infinite difference because it was still possible on 3d6 but wasn't possible at all on the modified d20 scale. I also showed how moving in the other direction (and following the maths as presented in the OP) we encountered ~10% deltas between outcomes. This is because you're moving up and down the probabilities at twice the rate -- it's not one for one from a giving comparison start point. This is due to the scaling invalidating half of the die rolls possible by treating them functionally the same.Right, because nobody is saying that 3d6 produces similar rolls to rescaled d20 (or vice versa). We are saying that if you use a suitable rescaling that (approximately) equalizes the variance of the two distributions, then the success probabilities are close, for any DC you want to set.

Absolutely, I can do that. What I can't do is do that and get a result of 11. That's impossible to do by rolling 3d6, doubling the amount rolled, and subtracting 10. So, a model that does that an includes 11 in it's comparison is unphysical.There's nothing unphysical about any of this. It's all something you could do in your game. Either (1) roll 3d6 to resolve checks, double the result, and subtract 10. If the result ties the DC, confirm success with a d2; or (2) roll 1d20 to resolve checks, as written. The claim is that these produce very similar success probabilities, regardless of the DC.

Sure, I can use your method, but by doing so I can only get half of the results you're saying correlate to the full range of the other method. That's what's unphysical. You aren't actually fully understanding the data you're creating because the program you're using draws a line between the data points, and you've confused that line for data.Alternatively, if you want luck to play less of a role in your game, you can either (1) roll 3d6 to resolve checks, confirming ties with a d2; or (2) roll 1d20, halve the distance from 10 and then add 10; or (3) double all bonuses and stretch DCs to be DC' = 10 + 2*(DC - 10). (2) and (3) are exactly identical; (1) is very close, at all DCs.

Any of these are things you could actually do; they're not impractical thought experiments.