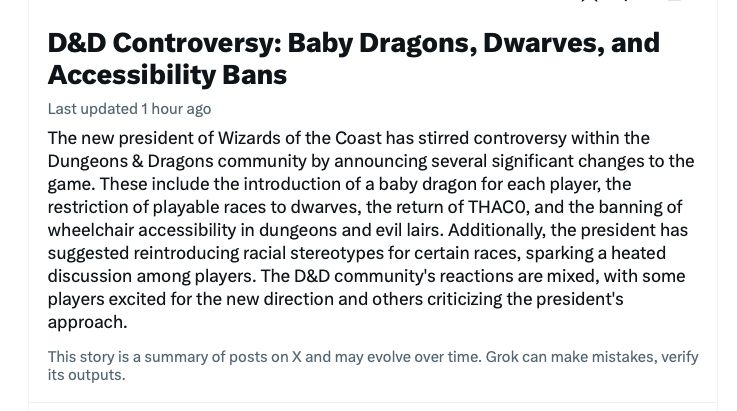

I guess I don’t have to worry about my job going away quite yet. This is what Twitter’s AI thingy thinks is currently happening in the industry I work in.


Twitter thinks there's a new WotC president who will give you a baby dragon.

Morrus is the owner of EN World and EN Publishing, creator of the ENnies, creator of the What's OLD is NEW (WOIN), Simply6, and Awfully Cheerful Engine game systems, publisher of Level Up: Advanced 5th Edition, and co-host of the weekly Morrus' Unofficial Tabletop RPG Talk podcast. He has been a game publisher and RPG community administrator for over 20 years, and has been reporting on TTRPG and D&D news for over two decades.

EzekielRaiden

Follower of the Way

> It also illustrates how humans who keep pushing AI think that something that's "close enough" (be it trending vs. factual, super-fast predictive text vs. writing, or image scraping/mashing vs. art) is equal to "good enough."

Good enough is sometimes all we have. Solutions to the three-body problem, for example, are always "good enough" because, to the best of our knowledge, a general analytic solution is truly impossible. "Good enough" solutions got us to the Moon, got Cassini to Saturn, and did a bunch of other stuff. "Good enough" specifically using "AI" may even help us solve the problem of fusion power (a recent German stellarator startup is using machine learning techniques to solve the problem of particles getting stuck in non-full-circuit orbits around the stellarator's internal magnetic field).

The irrationally exuberant boosters (or questionably moral hucksters) will try to shove "good enough" where it doesn't belong. This has been true of every technology since at least the days when we invented writing.

> I assume it's PR spin until it is less wrong all the time.

I don't think PR spin is what makes it funny. That would seem to be putting the cart before the horse: PR spin would be exploiting the humor that they hope is already present.

Joshua Randall

Legend

> Ah, but are the players getting baby dragons? Because if they are, did you get them off Amazon and can you share the link?

Sorry, it was an obscure crowdfunding campaign and they ran out of baby dragons during fulfillment.

dave2008

Legend

> Because it can't. It is physically incapable of doing so, and unless a radical new development occurs, this will not, cannot change.

According to my son (who is graduating with his undergrad and master's from Stanford in CS), AI is pretty close to its current limits because we don't currently fully understand how or why it does what it does. Until we humans get a better understanding of AI, we don't have much room to improve the technology.

EDIT: Just want to clarify, there are still many untapped uses for AI as we currently understand it. That is still a growing field.

EzekielRaiden

Follower of the Way

> According to my son (who is graduating with his undergrad and master's from Stanford in CS), AI is pretty close to its current limits because we don't currently fully understand how or why it does what it does. Until we humans get a better understanding of AI, we don't have much room to improve the technology.
>
> EDIT: Just want to clarify, there are still many untapped uses for AI as we currently understand it. That is still a growing field.

Yeah, I've heard some similar things. We're also running out of high-quality "natural" training data, which means we may even start growing a whole additional layer of "we don't know what's going on" by training one AI to generate high-quality fictitious data so that a second AI can use it as seed data for whatever the user actually wants to see happen. Personally, I'm really skeptical that such "synthetic" data can achieve anywhere near the same results as real-world data.

The field has had near-exponential growth, but major limitations are already coming up hard and fast. Scalability remains a major bottleneck, even with improvements in things like subword tokenization.

Clint_L

Legend

News stories like this are entertaining, but I think folks are too prone to read them as "a-ha, AI is nowhere near as impressive as the media suggests." The reality is the opposite: sexy and entertaining stories about AI get media coverage, but the actual application of AI has already radically transformed all kinds of businesses and jobs, and the pace of transformation is accelerating.

J-H

Hero

I tried looking up some info about septic tanks backing up during high rain events today. The first two articles that came up were either AI-generated or written by someone whose first language is not English. The tip-offs are really odd, awkward phrasing, a high degree of repetition, and entire paragraphs of fluffy filler that convey no information beyond "don't worry and it's important to review all the options carefully before making your decision and there are multiple potential causes."

The internet is going downhill. (OK, yes, it's been doing that since about 2007 and the advent of Web 2.0).

smiteworks

Adventurer

To be fair, it is not too far off from the journalism we see splattered across the web. Clickbait titles that don’t match the actual story, no verification of facts, and little attempt to determine the true story are probably more common than true journalism.

What would be nice is if AI could build up a list of trusted sources, or research and find those people for an industry and then conduct follow up fact checking with these people. That is time-consuming work human “journalists” rarely do anymore. The article could then quote and list their sources. If a source wished to be anonymous, it would need to verify with a minimum consensus or label those facts as potentially untrustworthy.

Real, old-school journalists used to do this all the time. ENWorld does this. Many do not.

> Whether or not something is factually true is part of semantic content. The meaning of the statement.

Not quite: the semantic content of "I am sitting at a desk as I type this" does not change whether the sentence is true or false. (This was an issue that Bertrand Russell wrote a lot about, around 120 to 110 years ago.)

But it is generally accepted that truth depends on semantic content.

EzekielRaiden

Follower of the Way

> Not quite: the semantic content of "I am sitting at a desk as I type this" does not change whether the sentence is true or false. (This was an issue that Bertrand Russell wrote a lot about, around 120 to 110 years ago.)
>
> But it is generally accepted that truth depends on semantic content.

Alright. The actual truth value depends on content the AI cannot, even in principle, see or process.

"AIs" encode only what they are exposed to in their neural network. If they are exposed to a falsehood, they will regurgitate falsehoods. These are distinct from what are called "hallucinations," where the AI is confidently wrong about something more or less by accident (AIUI, because it prioritizes good grammar above any other concern). Apparently, there are differences in code processing between hallucinations and (for lack of a better term) "sincere" responses, which means it is possible to train a module to detect these differences. However, even with such efforts, (a) "hallucinations" don't go away, they just become harder to identify, and (b) unknowing but "sincere" falsehoods cannot even in principle be detected this way.

So we still have the enormous problem of AIs being confidently incorrect; we've just cut down on one of its most egregious forms. Unless and until we can actually get a true AI, something that can process both syntactic and semantic content, we will continue to have issues. As one science YouTuber puts it, until we have an AI that can do things like correctly explain the Bell inequalities (or other similarly difficult, but generally understood, scientific or mathematical concepts), the advocates' outlook on the future prospects of LLMs and machine learning looks more than a little rosy. They are useful tools. They can help us solve, or at least approximately solve, real and difficult problems. But AGI these are not, especially as we are already hitting the limits of current computing technology in terms of scalability (unless, as noted, major new developments occur to change that).
