
SkyNet really is ... here?

- Thread starter Theory of Games

trappedslider

Legend

Are (-) threads a thing now?

They aren't supposed to be, or at least this one isn't marked as such.

So I'm just finishing up a little project where I had an AI generate a Python script to create dozens of iterations of a board game map I am considering, as well as perform a regression analysis of all the cards in the game to see if there are any power outliers. And... it did pretty darn well. It only took me an hour of work. I know some coding, and I know just a smidge of Python... but there it is.

People who don't think this technology is transformative are kidding themselves. I am waiting for the next wave, when TV starts dying out because people can just make their own shows and movies. Kids a few years from now won't go to the movies or watch TV... they will just have the AI make up something cool, and if they don't like it they can edit it on the fly. The best of the bunch will trickle up and be shared by all. A complete disruptor to the entertainment industry.

So how is AI going to screw us? There are a number of possible ways:

1) Ignorant Destruction: If the AI goes full Skynet, honestly it probably won't be malicious in an "I must kill all the humans" kind of way. More likely, an AI will be given some task and it will do it... not realizing that the task will also involve a mass extinction event on Earth. Oops.

A big part of this is that prompting an AI is still as much mysticism as it is science. Just like in the old days of superstition, you find something that works and you just go with it, but truly pinning down that sentence A made the AI do task X is still very much a grey area. And so people are going to write bad prompts, and as the tool gets stronger, at some point it's going to do something bad on a massive scale.

2) Massive worldwide hack: While AI can put some code together, one thing it is TERRIBLE at is secure code. Like, god-awful. And so it's only a matter of time before enough sites have massive vulnerabilities that hackers (probably powered by AI themselves) will take a wrecking ball to them.

3) Irrelevance of Humanity: Much more likely than AI killing us is that it simply replaces us. Of course, as AI becomes poised to take human jobs, the usual refrain is "but new jobs will be created to replace them."

I don't believe that. Will there be new jobs created? Of course there will. And humans might work on them... for a little bit. But then an AI will be trained to do that job, and it's off to the races.

When machines replaced our physical labor, we shifted to mental tasks for the bulk of our economies. But now AI is poised to replace mental labor. Every job you can think of is a combination of physical and mental labor... and if the machines become better at both, there is no job you could create that humans would be better at than machines, and certainly not on the scale needed to provide work for everyone.

And maybe you get a Universal Basic Income going, but what are humans going to do? They won't need to work... and the machines will likely get better at creative tasks too. So it's the WALL-E scenario: humans just become fat, lazy slobs who sit in chairs, drink soda, and hang out all day.

4) The Will to Power

Technological transformations often shift and concentrate power in new places. It is possible that a new Alexander or Genghis Khan will arise, using AI and robotics to unleash a new power on the world... once again enslaving mankind to a dictator. While that could be a Skynet AI, it could just as likely be a human who saw the potential and seized the opportunity.

So yeah, lots of ways this can end badly for humanity.

I don't see how AI could become more dangerous than humans. And new humans are being created every day with a complete and utter lack of governmental control.

"It can give bad medical advice" so can a faith healer with a much worse track record.

"It can hack emails when given access to them" so can hackers (and they hack emails they aren't given access to).

"It can drive our car into another car or an innocent pedestrian", like your average drunken driver.

"It could decide to kill people using weapons" hey, Cain patented that after a chat with Abel...

"It could genocide us without empathy", emulating Nazis (and many others, they don't have a monopoly).

I fail to imagine a realistic scenario (i.e., not the Paperclip Apocalypse) where AI would be more harmful than a human.

kermit4karate

A strong opinion is still only an opinion.

I use different AI models daily, both personally and professionally, and see them as revolutionary, now with the advent of LLMs. I also see the weaknesses.

I am a big fan of AI, but there are limits to how long these things can run autonomously and succeed at complex tasks, and that limit is not very high. There is a fun project, "Claude plays Pokemon", that lets the AI try to beat those games. The last I checked, the furthest it had gotten was 3 gyms, which is pretty impressive for a computer but not so much for a human.

On the other hand, AIs are very strong (stronger than most humans) at specific, narrowly defined reasoning tasks. But they require supervision, or at least careful prompting, and need to be monitored constantly.

The thing for me isn't at all what it can do today, but what it will be able to do 5 years from now.

I've worked in IT for 30 years, and I don't even think the Internet itself was as disruptive as AI will be over the next 30. I honestly fear for children growing up today.

First stages? Amazing things like cures for diseases, higher crop yields, cold fusion. Later stages? Massive global unemployment.

People need to be employed because we've collectively decided that wealth would be shared in exchange for work. There is no reason to keep doing that once work can be entirely outsourced to machines.

The situation you describe might raise two questions: what will humans do (and we have numerous examples of people living happily as retirees without having to work, and of societies where the workweek was much shorter without any societal collapse, since doing things will remain possible, except that no one will be forced to do things or starve), and how will we decide to share the wealth? If we decide that all the wealth should go to the capital holders of AI companies, we might get a Hunger Games society. If we decide that wealth should be taxed for the public good, we might get Starfleet. The choice will be ours, as it was over the 20th century. No two (democratic) countries adopted the same path to share the wealth created by technological progress since the end of WWII.

How has income inequality within countries evolved over the past century?

While the steep rise of inequality in the United States is well-known, long-run data on the incomes of the richest shows countries have followed a variety of trajectories.

Compounded with the redistributive effect of taxation and transfers:

(Income redistribution through taxes and transfers across OECD countries)

Several scenarios of public choice have unfolded. There is no reason to expect that only the worst one (pro-capital-accumulation policies PLUS little or no reduction of inequality through public policy) will be chosen.

I agree with you on the possible path, but I don't think the ultimate outcome would be negative, and therefore it would not spell doom for humanity.

Theory of Games

Storied Gamist

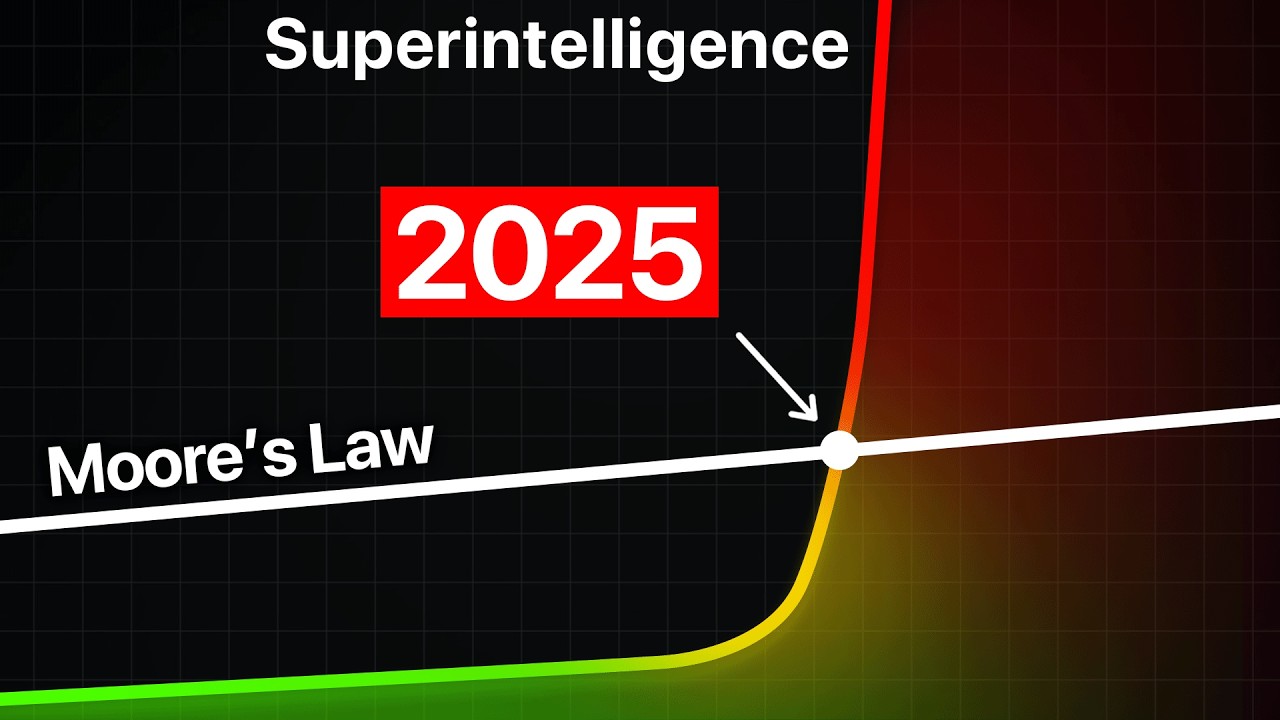

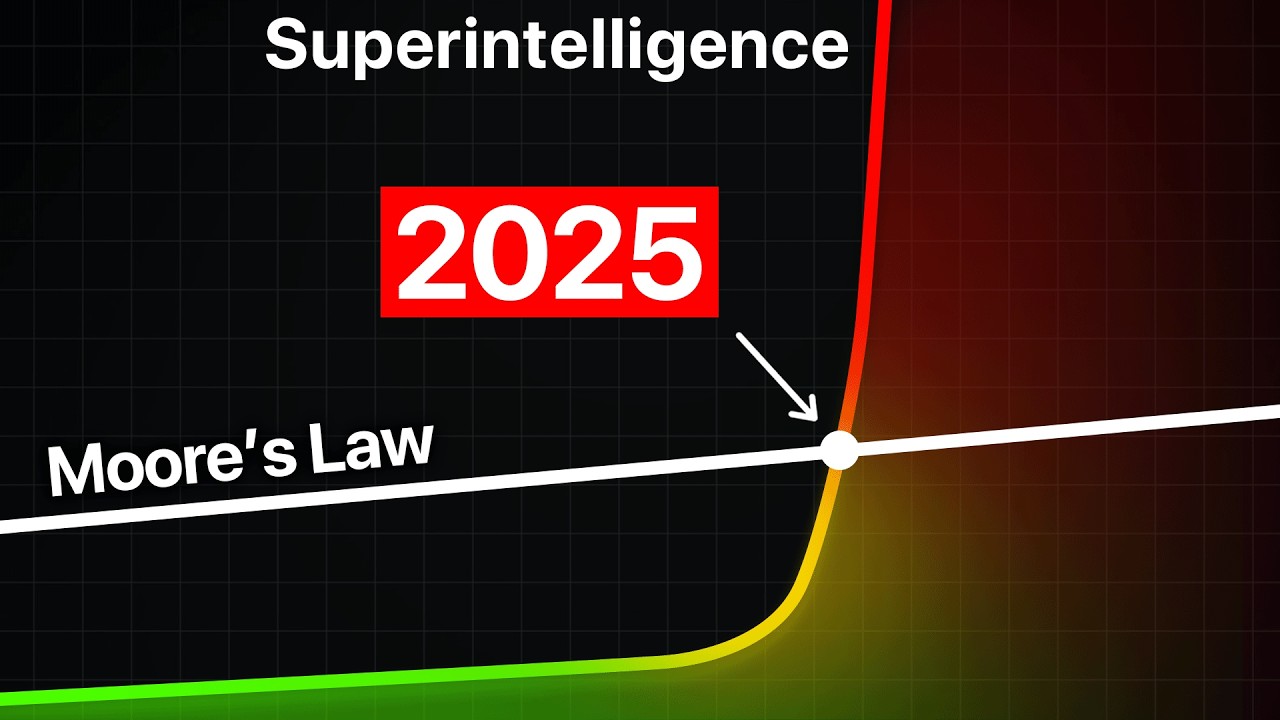

And your last sentence sums up the issue: as Super-Intelligence emerges, the technology outpaces our ability to track exactly what AI can do and how fast it can do it. Already we've learned that the best AI can learn at least 10,000 times faster than humans can:

It Begins: AI Is Now Improving Itself

Detailed sources: https://docs.google.com/document/d/1ksVvFuR0IttxzH6zoASSYy7ZhTDqif42IFXp25ITVKU/edit?tab=t.9rb62ckaanow

Based on the report: Situational Awa...

Our inability to keep pace with the technology is a very serious problem. Humanity has always created tools, but we've never created something that can literally act without our guidance AND outperform us intellectually. The fact that the best minds in the field are sounding an alarm is ... not to be ignored IMO.

The problem is they are sounding the alarm and still going ahead. This makes me suspicious: if they were really alarmed, why not stop?

Theory of Games

Greed. Hubris. As I posted above, and as many of the videos I've presented explain, a big factor in this is human weakness: our reckless desire to "win" regardless of what that "win" may cost us. Many of the top tech companies involved in this AI "arms race" simply aren't interested in the risk to Humanity because the wealth and fame they can earn are blinding them. And it wouldn't be the first time this kind of thing has happened, of course.

My hope - along with others who understand the threat here - is that we take steps to contain the technology and perhaps eliminate the danger.

kermit4karate

The "they" are different people. Whole lots of different people with lots of different motives and agendas.

If it had been up to me (haha!), I would have used my celestial powers to wipe the minds of the first individuals who conceived of an LLM.

BAM! Problem solved. Just gotta stay on top of it.
