Chaosmancer

Legend

Fair enough, neither am I, I will base the following entirely on

How different weighting methods work

Historically, public opinion surveys have relied on the ability to adjust their datasets using a core set of demographics – sex, age, race and ethnicity, … (www.pewresearch.org)

so let's see where we agree and disagree

First, what this method is intended to do is reduce the bias in a self-selecting poll (like WotC's). The bias it tries to adjust for is introduced by the people answering the poll not being an exact proportional match for the entire population, and by different groups within the population holding different opinions to varying degrees.

Second, it attempts this by determining how far the people answering the poll deviate from the overall population, and then adjusting the poll results towards what is known about the overall population (from well-established figures that are known for the entire population, e.g. from government surveys), based on demographic factors such as sex, age, race and ethnicity, educational attainment, and geographic region.
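To make those two steps concrete, here is a minimal sketch of the simplest version of the idea (post-stratification on a single factor). It is not taken from the Pew article or from anything WotC has published: the function name `poststratify`, the field names, the age groups, and every number are invented for illustration. Real weighting schemes usually combine several factors at once and use more involved techniques.

```python
from collections import Counter


def poststratify(respondents, population_shares, group_key, answer_key):
    """Return (unweighted, weighted) answer shares for a list of respondents.

    respondents: list of dicts, one per respondent (hypothetical layout).
    population_shares: known share of each group in the full population.
    """
    n = len(respondents)
    sample_counts = Counter(r[group_key] for r in respondents)

    # Weight per group = population share / sample share.
    # Over-represented groups get a weight below 1, under-represented above 1.
    # Note: a group with no respondents at all cannot be corrected this way.
    weights = {
        group: population_shares[group] / (count / n)
        for group, count in sample_counts.items()
    }

    raw = Counter()
    weighted = Counter()
    for r in respondents:
        raw[r[answer_key]] += 1
        weighted[r[answer_key]] += weights[r[group_key]]

    total_weight = sum(weighted.values())
    unweighted_shares = {a: c / n for a, c in raw.items()}
    weighted_shares = {a: w / total_weight for a, w in weighted.items()}
    return unweighted_shares, weighted_shares


# Invented data: 80 of 100 respondents are under 30, but the (assumed)
# population is split 50/50. The two groups hold different opinions.
sample = (
    [{"age": "under_30", "answer": "approve"}] * 60
    + [{"age": "under_30", "answer": "disapprove"}] * 20
    + [{"age": "30_plus", "answer": "approve"}] * 5
    + [{"age": "30_plus", "answer": "disapprove"}] * 15
)
population = {"under_30": 0.5, "30_plus": 0.5}

unweighted, adjusted = poststratify(sample, population, "age", "answer")
print(f"unweighted approve: {unweighted['approve']:.2f}")
print(f"weighted approve:   {adjusted['approve']:.2f}")
```

In this made-up case the raw approval is 65%, while the weighted figure drops to 50%, because the over-represented group is also the more approving one. The adjustment only works to the extent that the population shares are actually known and that opinion really does vary along the factor being weighted on.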

Feel free to disagree and say where I misunderstand this.

Okay

Based on this, I'd say it cannot be applied by WotC. For one they do not ask for enough demographic factors for this to be feasible, and second there are no established facts that are known for the entire population (by such demographic factors) that would be of any use here.

Disagree. They have asked for plenty of demographic information. And they have plenty of established facts known about the entire population. It is not all asked for in this precise survey, but they have been surveying the community for a decade, asking these questions, and utilizing the market research of Hasbro and other data points for the community. DnD Beyond uses Google to sign in, which probably gives them access to Google's research on the DnD Beyond community. They have the metrics of their YouTube channel, which gives them quite a lot of demographic information as well.

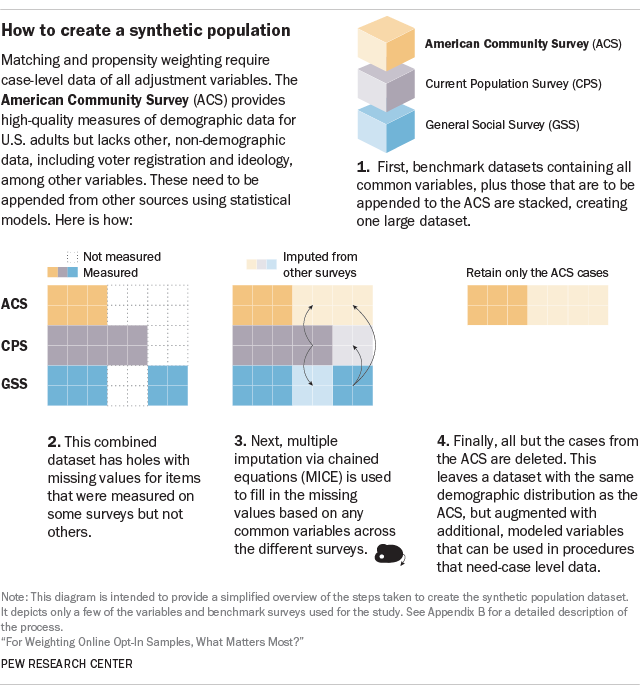

Just because it is not in this single survey does not mean they do not have a large amount of data on the community. Hence the mention of the synthetic population model to COMBINE and REFERENCE their population information from multiple resources.

The paper then goes on to discuss that this approach is not really working reliably in the first place ("But are they sufficient for reducing selection bias in online opt-in surveys? Two studies that compared weighted and unweighted estimates from online opt-in samples found that in many instances, demographic weighting only minimally reduced bias, and in some cases actually made bias worse."), but that is moot here, as this is not a workable approach for WotC's survey to begin with, for the reasons I gave above.

Which is why you don't use just one method.

I grant you this, so we can move on from it, it is not relevant

I don't need you to grant me my own statements. I need you to stop making up these strawmen to try and discredit me.

I don't think I disagreed with this point, in that they are using that survey method. That does not address any of the issues with their survey that I brought up however. You can use a widely used methodology wrong too....

Really, you don't think you disagreed with it? What was this statement then? "and make unfounded claims that WotC somehow is infallible." Just a slip of the keyboard for the fifth time?

And yes, you CAN use a widely used methodology incorrectly... mind proving that they've actually done that? Instead of using it exactly as it can be used?

This does not address any of the issues I raised, it just explains what I already know, i.e. what WotC is doing

If you understand what they are doing, then why do you keep insisting they are doing it wrong? Because everything I've found out about the situation points to them using a well-known method, in well-known ways, over the course of a decade, with access to large amounts of data, and utilizing well-known methods for reducing exactly the issues you and Max are fixated on.

But they still, somehow, have to be wrong, because you, somehow, have to be right.

No, it is not based on the company asking, it is based on the questions being asked, the information you as a participant have available while answering, and the answers the survey is intended to give the people coming up with the survey. In theory Google can screw this up just as much as WotC.

I gave specific issues I have, you cannot just handwave them away by saying 'others manage to have different surveys with a similar approach where they do not run into these problems'. If I tell you my car broke down because of X, you cannot just say 'no it didn't break down, the proof for that is that many cars never break down'... Either finally address them directly, or move on.

EDIT: @Maxperson is going into more detail in their post right below, I am tired of repeating my points over and over again, just for you to always ignore them anyway. They should be clear by now, or at a minimum you can find them if you actually want to address them for a change

The questions they are asking are the exact questions they intend to ask. Your interpretation of their goals with those questions is flawed, as I have stated repeatedly.

The information you have as a participant is fully sufficient to answer the questions they intend to ask, in the manner they intend to be answered.

The issue is not the survey. The issue is you insisting that they are trying to do something they are not trying to do, then declaring the survey broken because it can't do what you are imagining. This isn't "my car broke" and "here is evidence cars don't break"; it is "My car broke down, because [X] doesn't do quality control of their vehicles, because if they did it wouldn't have broken down" and "No, they do do quality control, but some things break anyway, despite that quality control, and quality control is not designed to catch every single possible issue."