I dare say that it's because it's more effective there? I surmise that AI is quite effective at finance tasks (like identifying data in a pile of documents and spitting out an Excel spreadsheet with it), a little less so in legal work (like the lawyer who used AI without checking, and the AI invented legal precedents because it wasn't trained on a real precedent database and had "learnt" that lawyer-y documents often cite precedents, so it generated them...), more difficult to use in visual arts (because it's difficult to generate consistent content), and even more difficult to use for writing a long, convoluted plot with branches like a videogame needs? Adoption should start where AI tools can achieve "professional-grade proficiency" earlier?


AI is stealing writers’ words and jobs…

- Thread starter overgeeked

- Start date

- Status

- Not open for further replies.

lynnfredricks

Explorer

Predictive analysis is one major consideration in a business in which you have a lot of moving parts and moving your milestones can have dire results.

Somewhat relevant: In the run-up to this year's Game Developers' Conference, organizers have published a survey of videogame devs (conducted in October 2023) which covered in part the use of gen AI in the workplace. A quote from an article on the topic:

Unsurprisingly, it looks like the business side is more receptive to gen AI than the creator side, at least in the video gaming world.

Also, consider that anyone who is a seasoned professional on the content side is going to be more aware of how new, streamlining tools can impact them. There have been advancements on the content side (effects, 3D, etc.) that streamlined and automated some content creation but didn't otherwise eliminate jobs, because the job remained complicated if you wanted cutting-edge results. You had to up your game.

But consider now how companies are adding AI functionality in such a way that it doesn't eliminate seats. Tool makers like Adobe consider this carefully because content creators play a significant role in tool selection as well as tool operation. Over time, they add more and more features and put them on subscription, eventually adding 'seat-taking' features that they can in turn charge for in their subscription plans. But they certainly don't want to turn current stakeholders into enemies.

overgeeked

Open-World Sandbox

Good. Here’s to hoping for more.

www.techspot.com

www.techspot.com

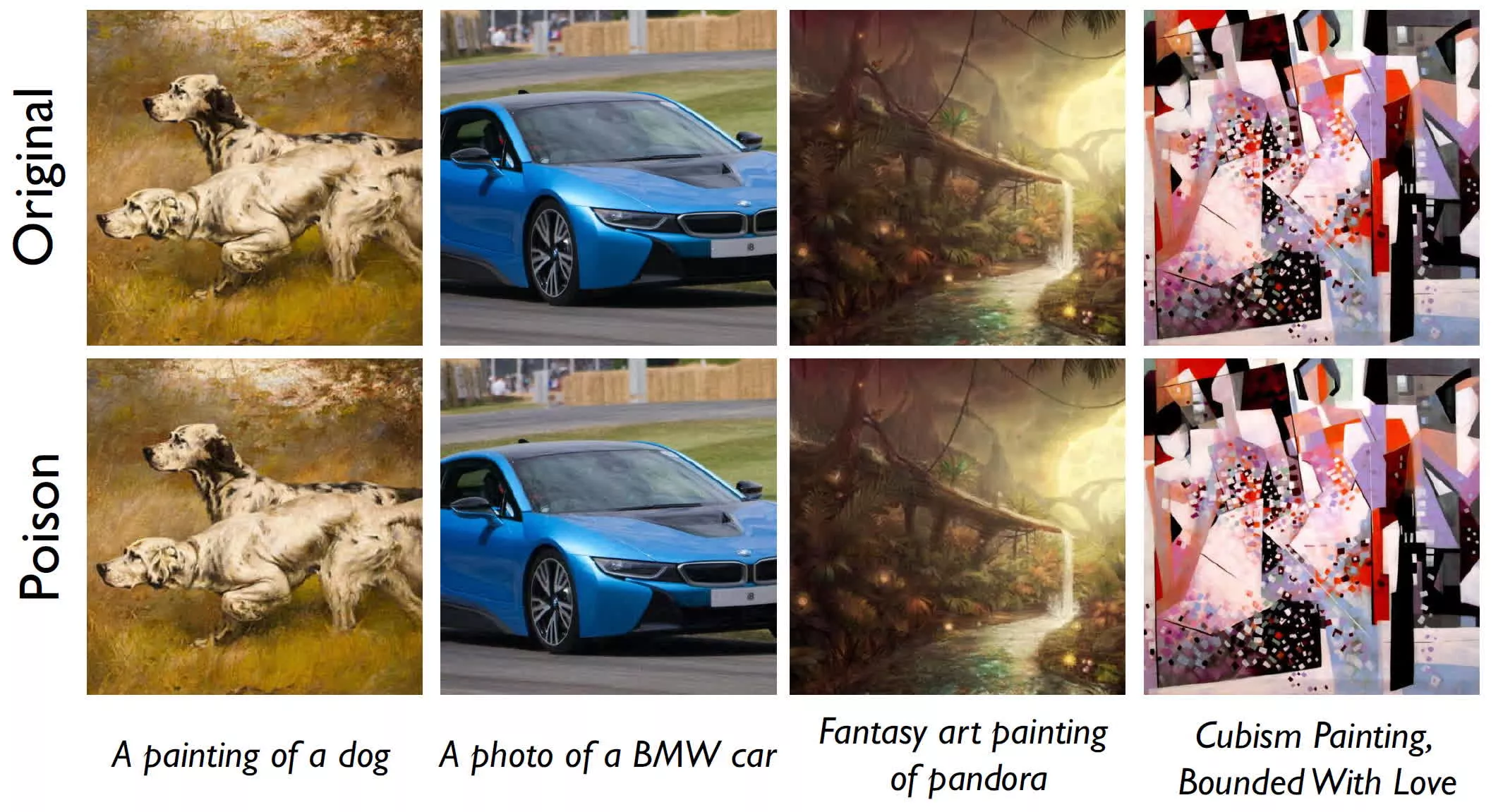

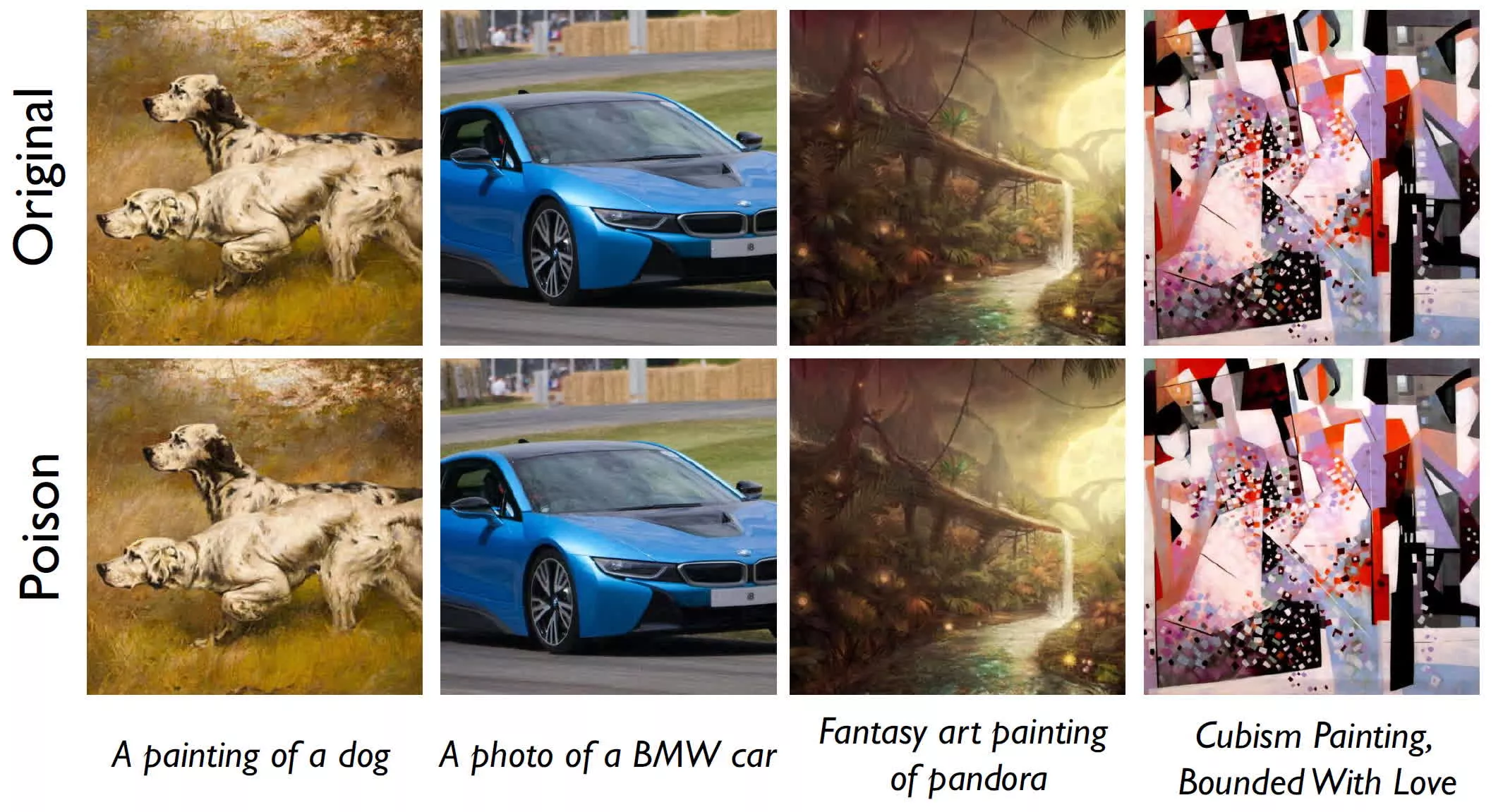

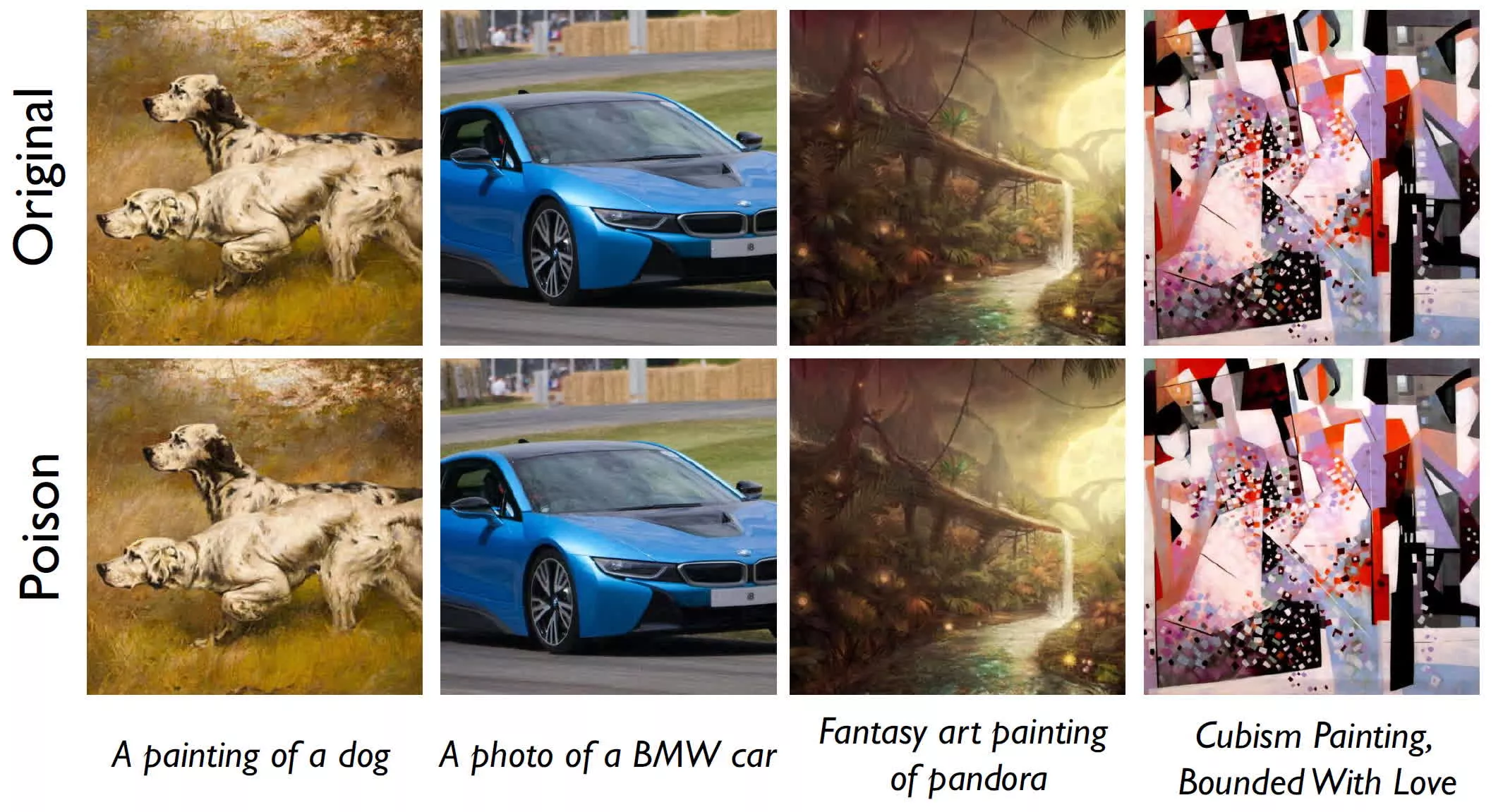

Nightshade, a free tool to poison AI data scraping, is now available to try

Nightshade was initially introduced in October 2023 as a tool to challenge large generative AI models. Created by researchers at the University of Chicago, Nightshade is an...


trappedslider

Legend

The level of poisoning necessary to ruin future models is fairly unlikely to be achieved. Evidence in support of this expectation includes the fact that the process for adding nightshade is actually fairly complicated; and since nightshade is more about trying to produce some collective result rather than "protecting" individual works, I don't foresee a critical mass of people making the effort to do this. What's more, any particular model's notion of, for example, a car is going to be more informed by photographs than by digital paintings or illustrations. But the people who are going to be most interested in using this are probably digital artists rather than photographers. (Though it is conceivable that stock image sites might start to make use of a tool like this.)

Perhaps even more importantly, it seems like it will not be long before someone not only reverse engineers this, but figures out a way to reverse the process. Additionally, it strikes me that scanning for the presence of nightshade would be even more trivial than removing it. If use of this tool became widespread, most companies or organizations training models would probably just set up to scan for it and remove any poisoned images from the data set, or perhaps even remove the poison from the images they want to use. The idea that there would not be enough unpoisoned images left over to do the training seems improbable, especially given that images from before the date of release would be all but guaranteed to be clean.

Finally and perhaps most importantly, even if this "tool" were to be successful in its goals, it would primarily undermine open source models, and therefore further empower Adobe, Getty, Microsoft, and Meta, who have significant existing data sets and would have more resources to curate future ones. So then we would be in a world where paid and censored tools would still get used and artists would still get squeezed by them, except now they have to pay for the privilege of using them if they choose and will be more limited in the work they can use these tools to produce.

Yes, this tool gives a huge advantage to the players who have already collected their databases and have the resources to curate them, either by captioning them better (which is apparently the general approach that has emerged for improving models) or by generating new images with existing AI to retrain their models, preventing model collapse through human feedback that they can easily pay for (or collect through a captcha so everyone does it for free). Open-source solutions will be the ones targeted by such a tool, if anything.

On the other hand, I am not sure it's worth mentioning that a cure will appear (Glaze lasted 24h, and half of Nightshade is caption poisoning, which is certainly no longer effective, as I don't think anyone is using the original captions nowadays to train a model). But honestly, if artists don't want their work to be trained on without their permission, then unless you're in a place where AI training is explicitly allowed as an exception to copyright or not covered by copyright, why use their images? Existing datasets are large enough already and, unlike with LLMs, the need for more isn't exactly obvious (at least according to SAI developers; I am far from being competent enough to have an informed opinion in this field). It will kill one of the most common objections against image generative AI: that it is harming the monopoly of existing artists over their creations before its legal term.

Meanwhile,


The winner of a prestigious Japanese literary award has confirmed AI helped write her book

So artists can indeed use AI.

Scribe

Legend


This was never in question.


Maybe on your part, but I've seen people taking the stance that AI-enhanced art isn't art and True Artists Don't Use AI. Instead of stealing jobs (to refer to the thread title), it helped her to do her job.

trappedslider

Legend

So I was looking at Civitai and saw this as part of one model's description: "This new model was fine-tuned using a vast collection of public domain images"

CleverNickName

Limit Break Dancing (He/They)

Guy at Work: "You're not worried about AI replacing engineers?"

Me: "Nope."

Guy: "Well you should be. If AI can be trained to do art, it's only a matter of time until we can train them to do your job."

Me: "It'll never happen."

Guy: "What makes you so sure?"

Me: "Because when engineering goes wrong, it goes very wrong. Like, people can die, property can be destroyed, lives can be forever changed. And when that happens, we need someone to be held accountable. We need someone to sue."

Guy: "And you can't sue an AI."

Me: "Right. There's no legislation to hold AI accountable for mistakes, because that would mean a licensure process, and insurance, and focused training--"

Guy: "--which engineers already have. That would defeat the purpose of even having an AI in the first place."

Me: "Exactly. And that's why I'm not worried."


trappedslider

Legend

So, I went looking and found that the description "This new model was fine-tuned using a vast collection of public domain images" was only on one model but hosted on several different websites. At this point I honestly believe it's too late to do anything regarding images.

Also seems that the generation that grew up on Napster/LimeWire/Pirate Bay learned that maybe paying for stuff isn't so bad after all.
