Recently I had a discussion with some people about the Ubisoft NPC AI. My issue is with the inherent way LLMs work: they predict what a sentence should look like without really understanding what it says. An LLM is designed to give a believable answer with the information it has, or to make up a believable answer when it doesn't have that information. The issue is that it doesn't know a 'lie' from the 'truth'; it's all the same to it. These hallucinations, as they are called, can be mitigated with things like RAG and very specific datasets, but they have yet to be completely eliminated.

Example: The High Paladin of Justice and Truth sends you on a quest to recover the Crown of Might in the evil dungeon of DOOM(tm). There is a 3% chance that there is no dungeon of DOOM(tm) and another 3% chance that there is no Crown of Might. Lucky you, there is an evil dungeon of DOOM(tm), but after 27 sessions you still haven't found the Crown of Might, even though you've searched the dungeon three times already. You ask the AI DM: Are you sure there is a Crown of Might? AI DM: My apologies, I was mistaken, there is no Crown of Might, but there is a Lantern of Hope... Hint: There is also no Lantern of Hope...

Say this happens. How do you feel? You wasted 27 sessions. You'll never trust what the AI DM says ever again. You might even feel so wronged that you quit pnp RPGs entirely...

I don't mind NPCs lying or giving the wrong info, but a 1:33 chance that any given generation is made up on the spot and false? Especially when neither we NOR the AI DM know when it is lying... Yeah, that's not going to work without some human intervention.
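To put rough numbers on how that adds up over a campaign, here is a quick back-of-the-envelope sketch. It assumes the 3% (about 1 in 33) figure from my example, which is purely illustrative and not a measured hallucination rate, and it assumes every generation is independent of the others, which real models won't strictly be. The function name is just something I made up for this post.

```python
def p_at_least_one_fabrication(per_generation_rate: float, generations: int) -> float:
    """Chance that at least one of `generations` outputs is made up,
    assuming each output is fabricated independently at the same rate."""
    return 1.0 - (1.0 - per_generation_rate) ** generations


# Illustrative 3% figure from the example above, not a measured value.
RATE = 0.03

for n in (2, 10, 33, 100):
    chance = p_at_least_one_fabrication(RATE, n)
    print(f"{n:>3} generations: {chance:.0%} chance of at least one fabrication")
```

Under those assumptions, the two facts in the quest above (dungeon and crown) already carry about a 6% chance that at least one is invented, and it only goes up from there as the campaign generates more content.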

Don't get me wrong, I'm a very big proponent of AI/LLM, but I do realize that it's a tool, not a level 5 self-driving car. It's a great tool for a DM to get creative and get a lot of work done in a short amount of time. But as a fully standalone AI DM, an LLM might not be a good or even acceptable solution, not now, maybe never. When I look at what RAG does, for example (not in ChatGPT4), it takes more resources and makes the model even more unpredictable because you get tons of parameters to tune. At what point does the 'solution' become more costly than just hiring a good human DM? If the cheapest level 5 self-driving car cost $2 million, wouldn't it be cheaper just to hire someone to drive you around?

It kinda seems that people want to use AI/LLM as a hammer and treat every problem as a nail...

Source:

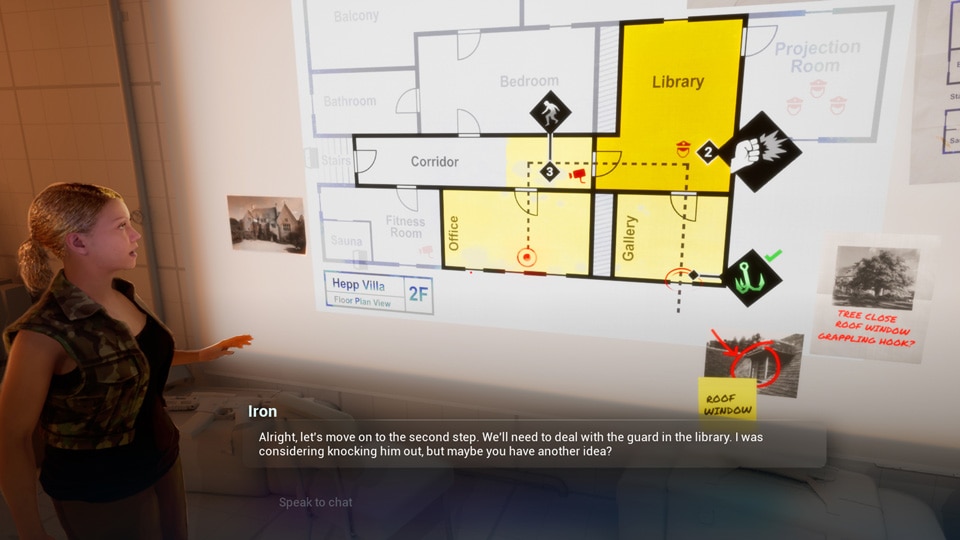

Have you ever dreamed of having a real conversation with an NPC in a video game? For the last few years, a small R&D team at Ubisoft’s Paris studio have been experimenting with generative AI in a first step toward making this dream a reality.

news.ubisoft.com

chat.openai.com
